Cross-platform adaptation in event-based perception is crucial for deploying event cameras across diverse settings, such as vehicles, drones, and quadrupeds, each with unique motion dynamics, viewpoints, and class distributions.

In this work, we introduce EventFly, a framework for robust cross-platform adaptation in event camera perception.

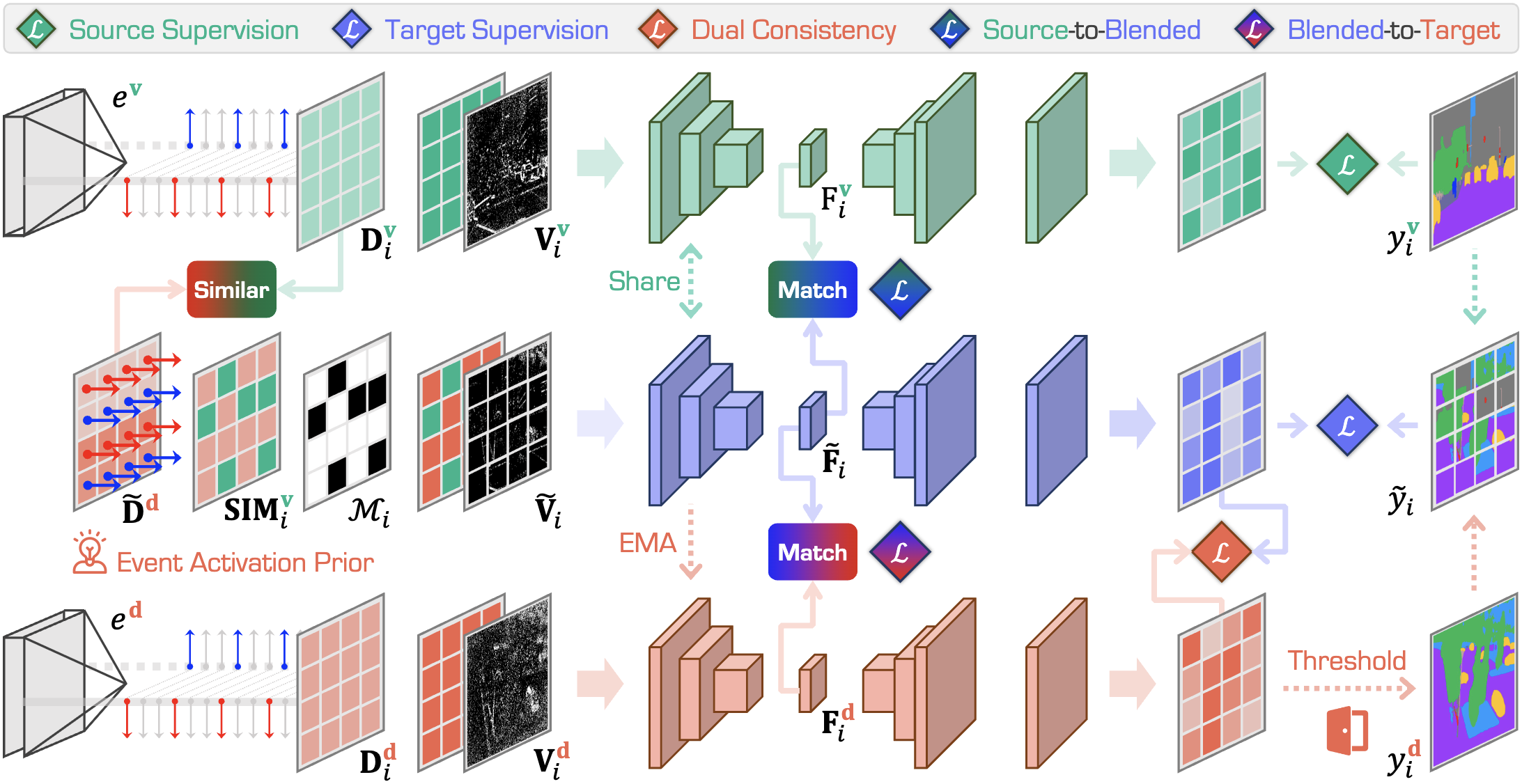

Our approach comprises three key components:

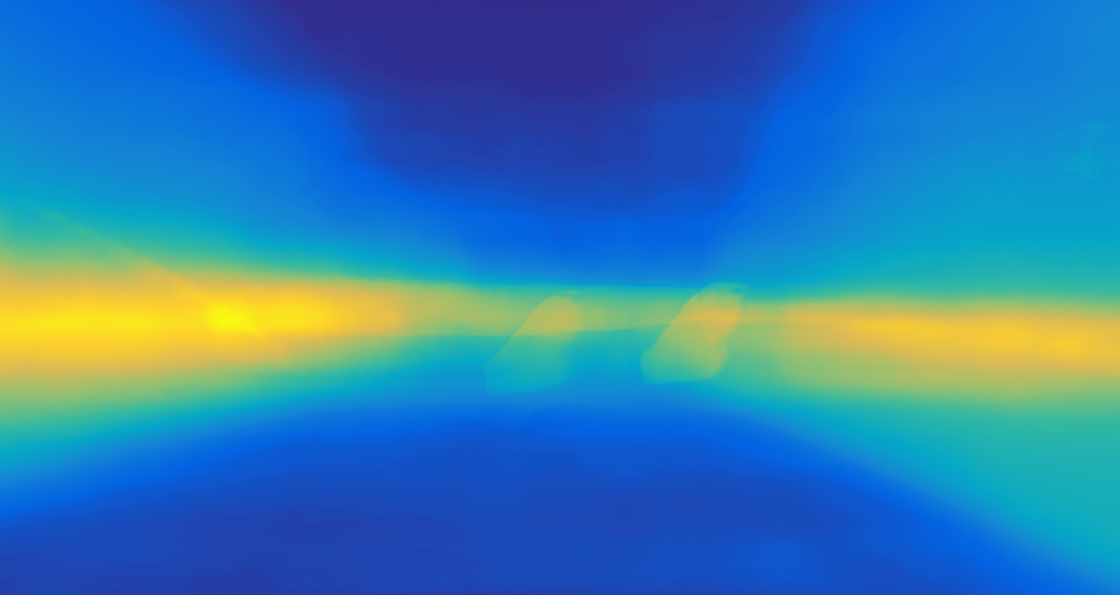

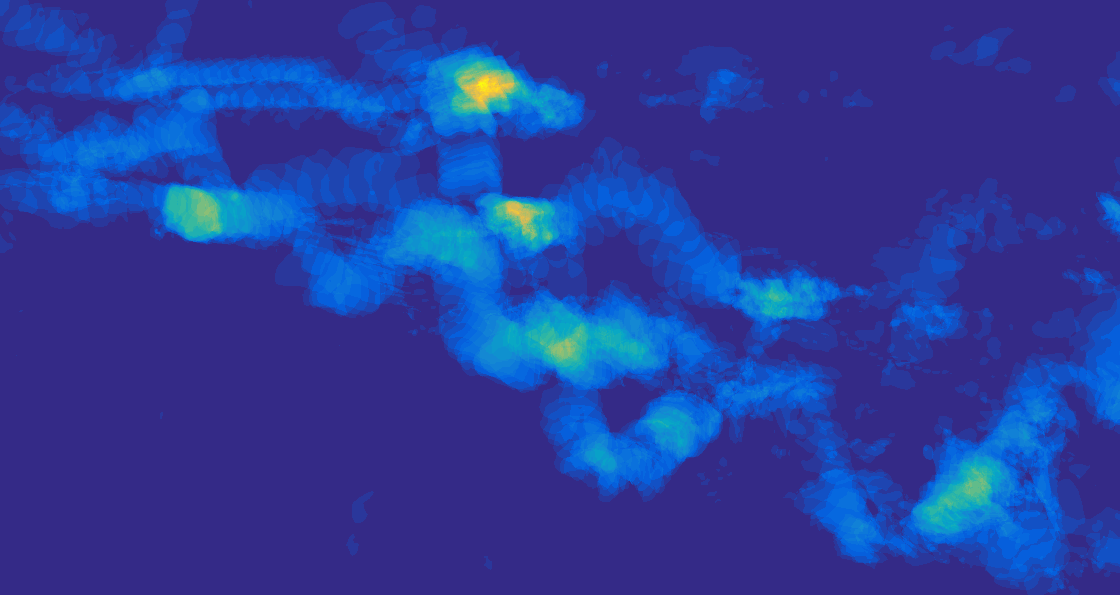

(i) Event Activation Prior (EAP), which identifies high-activation regions in the target domain to minimize prediction entropy, fostering confident, domain-adaptive predictions;

(ii) EventBlend, a data-mixing strategy that integrates source and target event voxel grids based on EAP-driven similarity and density maps, enhancing feature alignment;

and (iii) EventMatch, a dual-discriminator technique that aligns features from source, target, and blended domains for better domain-invariant learning.
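To make the first two components concrete, here is a minimal NumPy sketch of the underlying ideas, not the authors' implementation. It assumes an event voxel grid of shape `(T, H, W)` and per-pixel class probabilities of shape `(H, W, C)`. The function names, the quantile threshold, and the hard binary mask are illustrative assumptions; in particular, the similarity and density maps used by EventBlend are replaced here by a plain activation mask, and the discriminators of EventMatch are omitted.

```python
import numpy as np


def activation_mask(voxel_grid: np.ndarray, quantile: float = 0.5) -> np.ndarray:
    """Return an (H, W) boolean mask of pixels with high event activity.

    Activity is the absolute event value summed over the T temporal bins.
    The quantile threshold is an assumption made for this sketch.
    """
    activity = np.abs(voxel_grid).sum(axis=0)
    threshold = np.quantile(activity, quantile)
    return activity > threshold


def masked_entropy(probs: np.ndarray, mask: np.ndarray, eps: float = 1e-12) -> float:
    """Mean Shannon entropy of the class probabilities inside the mask.

    Minimizing this value pushes predictions in high-activation regions
    toward confident (low-entropy) outputs.
    """
    entropy = -(probs * np.log(probs + eps)).sum(axis=-1)  # shape (H, W)
    if not mask.any():
        return 0.0
    return float(entropy[mask].mean())


def blend(source: np.ndarray, target: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Copy target events into the source grid wherever the mask is True."""
    return np.where(mask[None, :, :], target, source)


# Toy usage with random grids standing in for real event data.
rng = np.random.default_rng(0)
source = rng.normal(size=(4, 8, 8))
target = rng.normal(size=(4, 8, 8))
mask = activation_mask(target)
mixed = blend(source, target, mask)
uniform_probs = np.full((8, 8, 3), 1.0 / 3.0)
loss = masked_entropy(uniform_probs, mask)
```

With uniform probabilities over three classes, the masked entropy equals the maximum value log 3, and it approaches zero as predictions become one-hot. That is the quantity an entropy-minimization objective of this kind would drive down on target-domain data.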

To holistically assess cross-platform adaptation abilities, we introduce EXPo, a large-scale benchmark with diverse samples across vehicle, drone, and quadruped platforms.

Extensive experiments validate the effectiveness of our approach, demonstrating substantial gains over popular adaptation methods. We hope this work can pave the way for adaptive, high-performing event perception across diverse and complex environments.

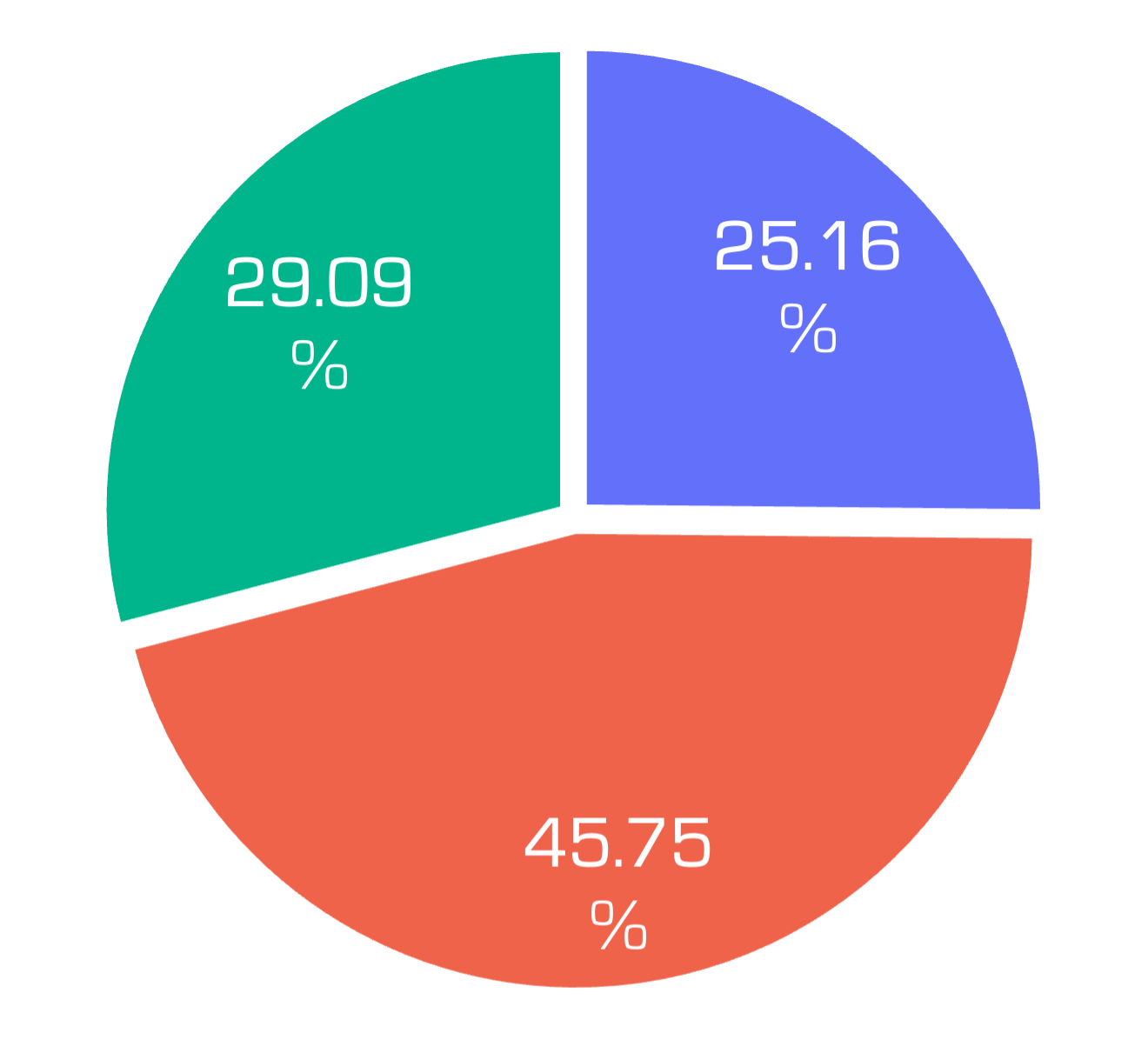

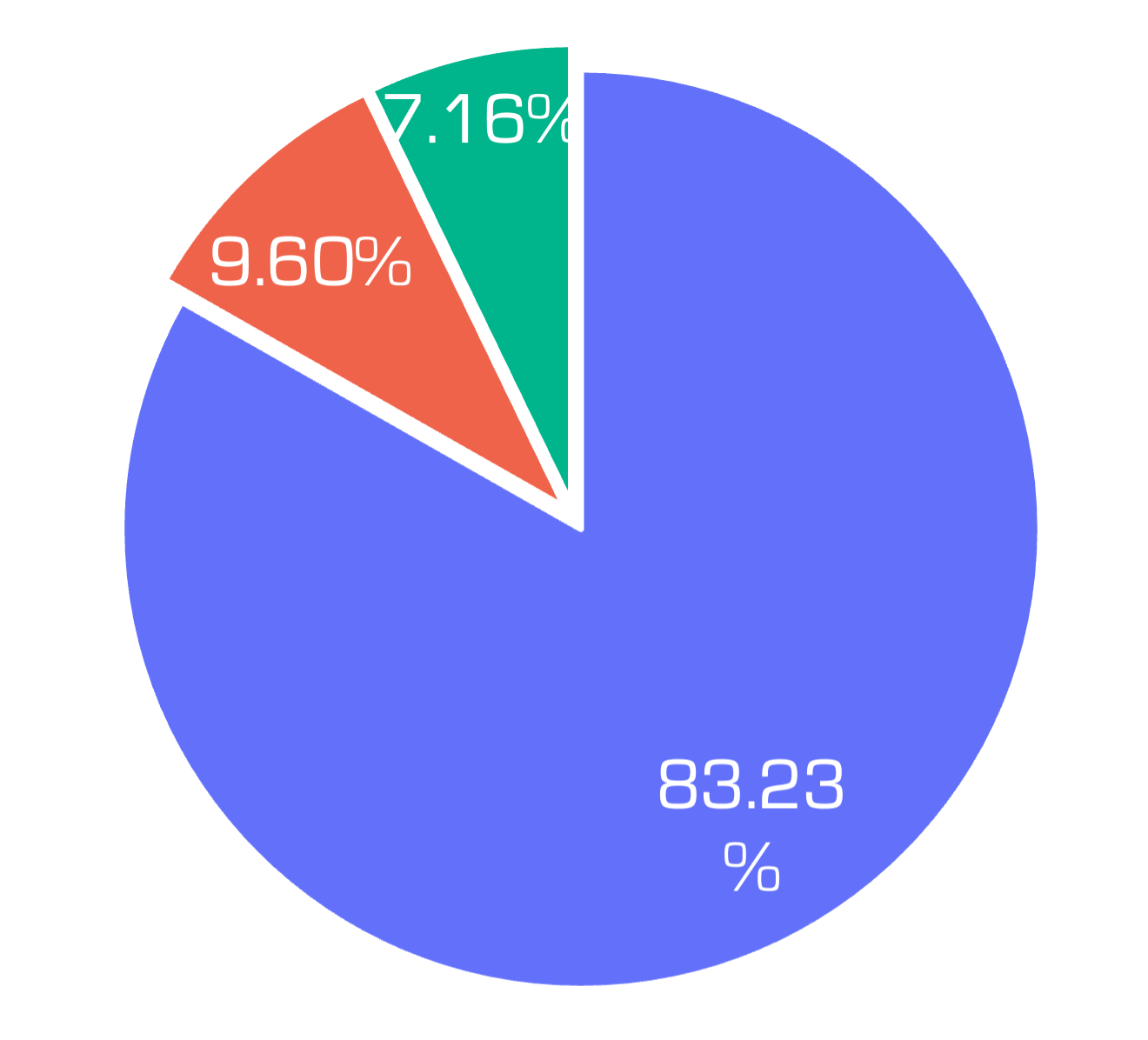

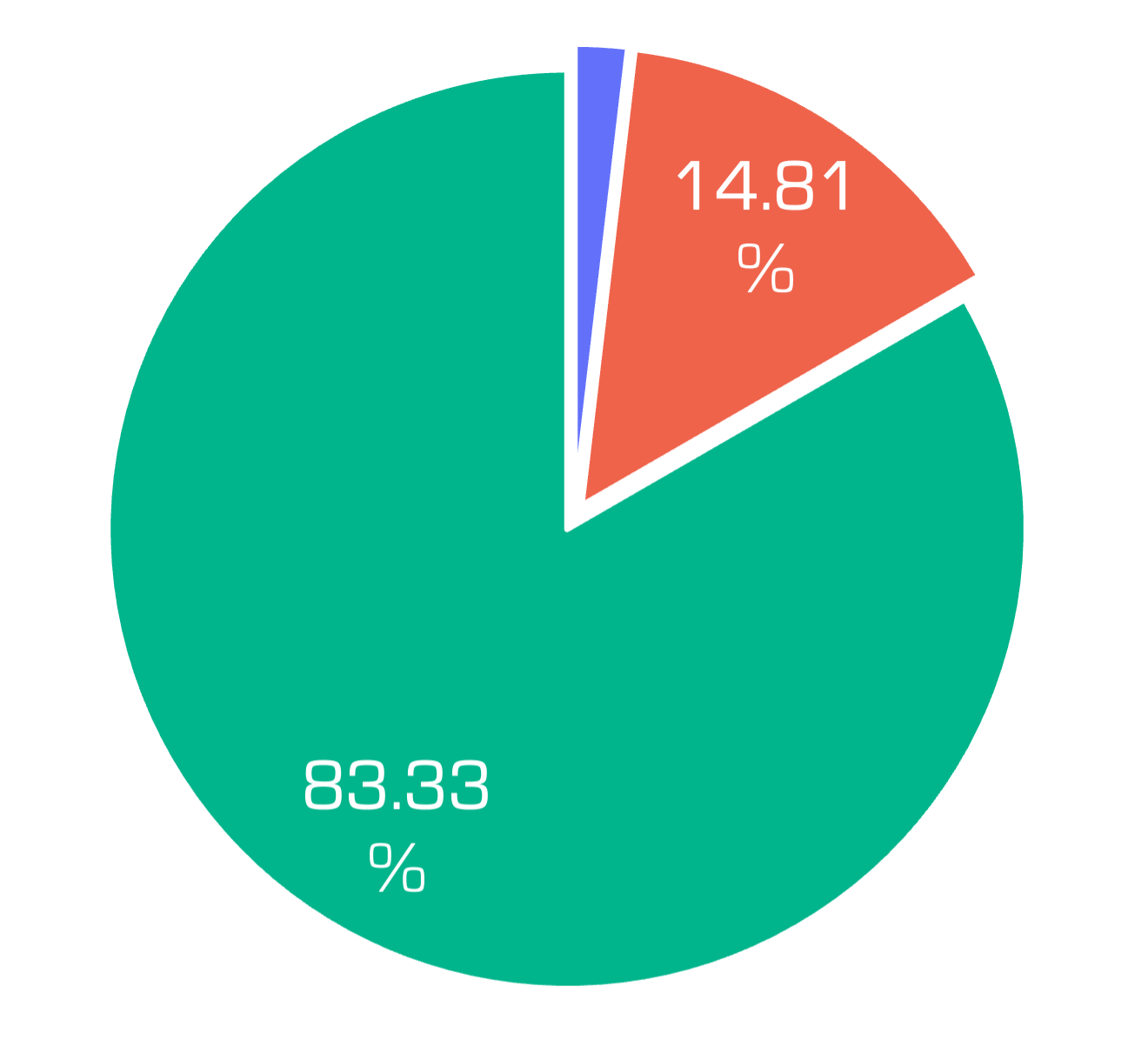

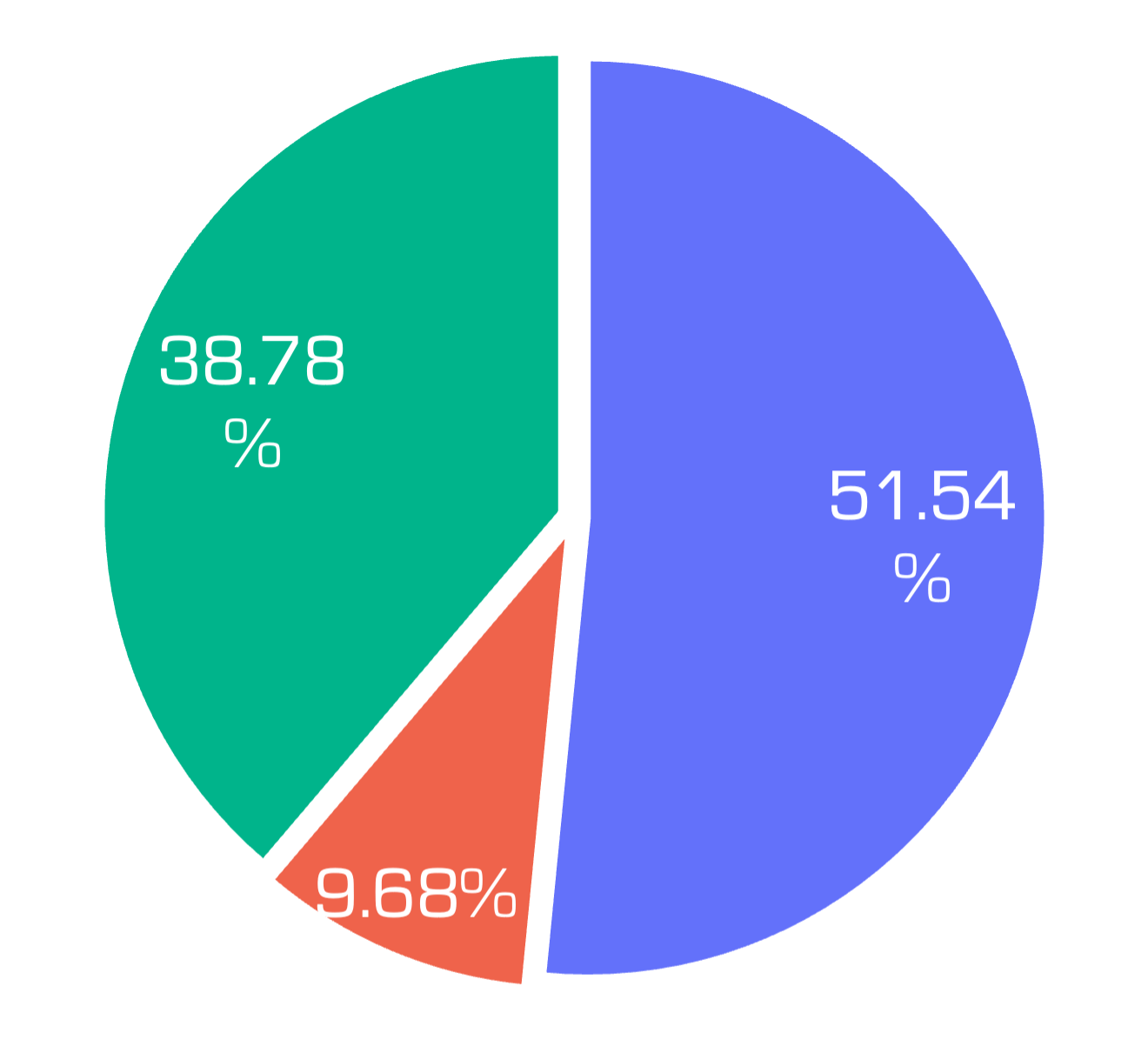

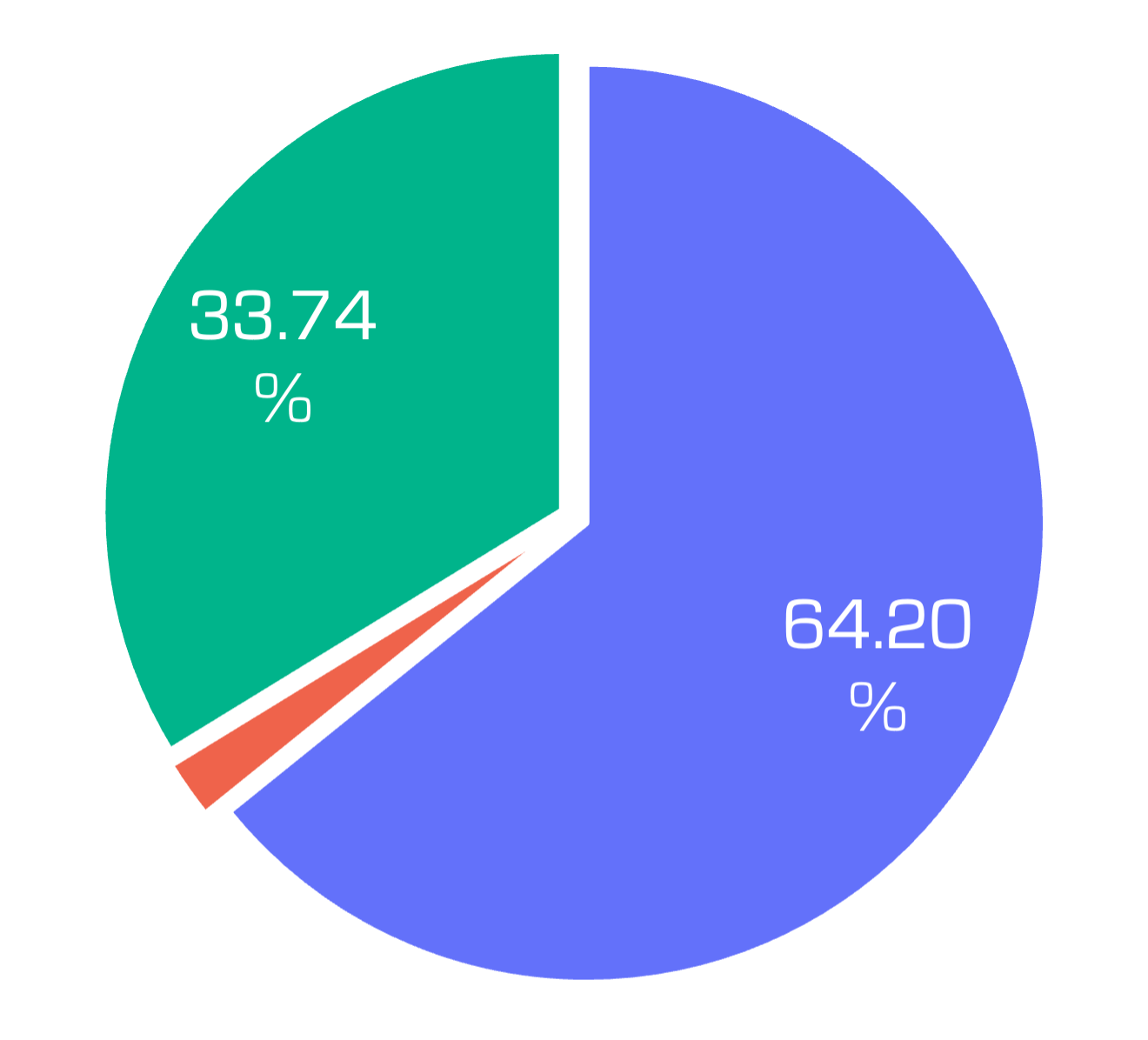

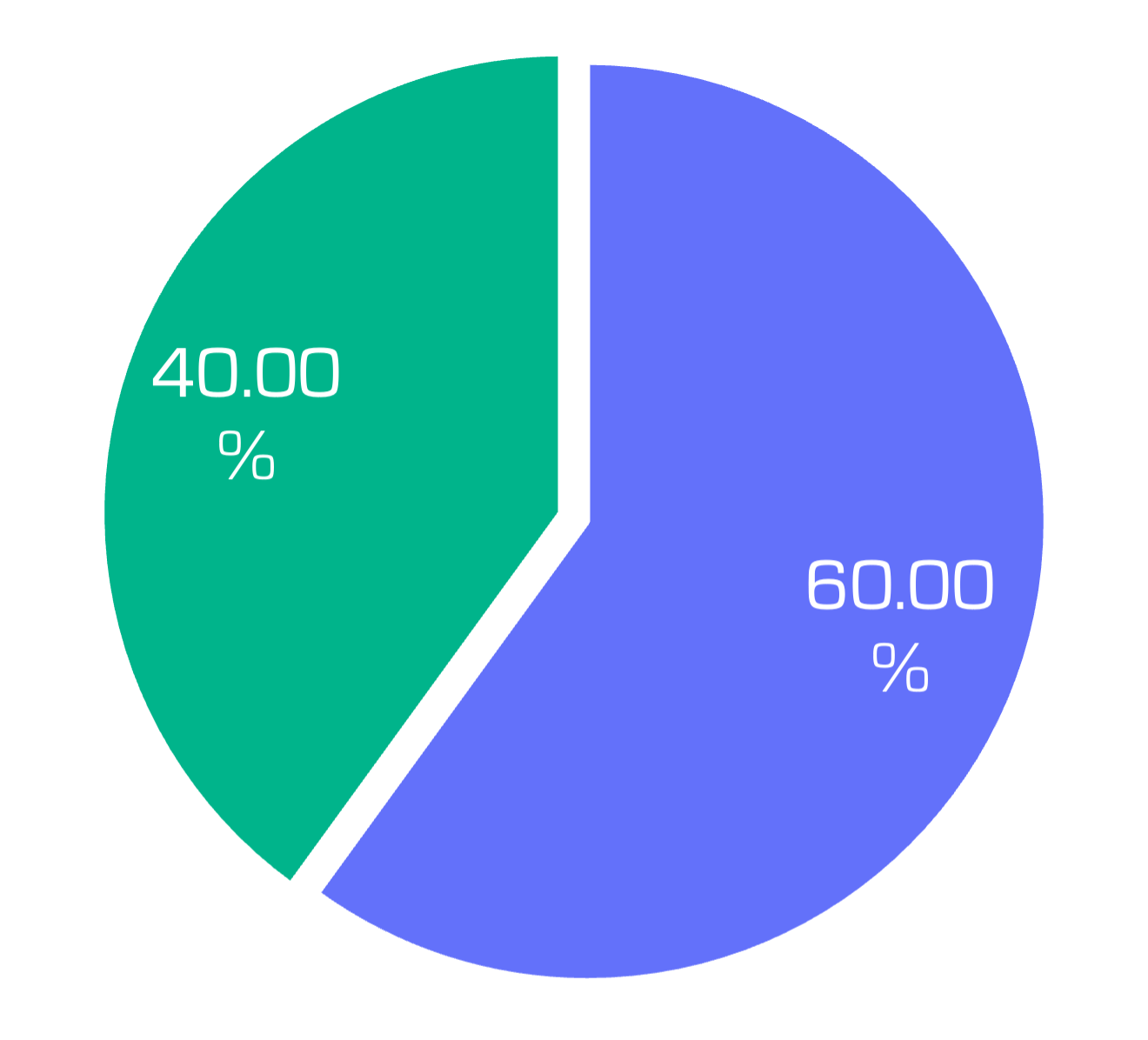

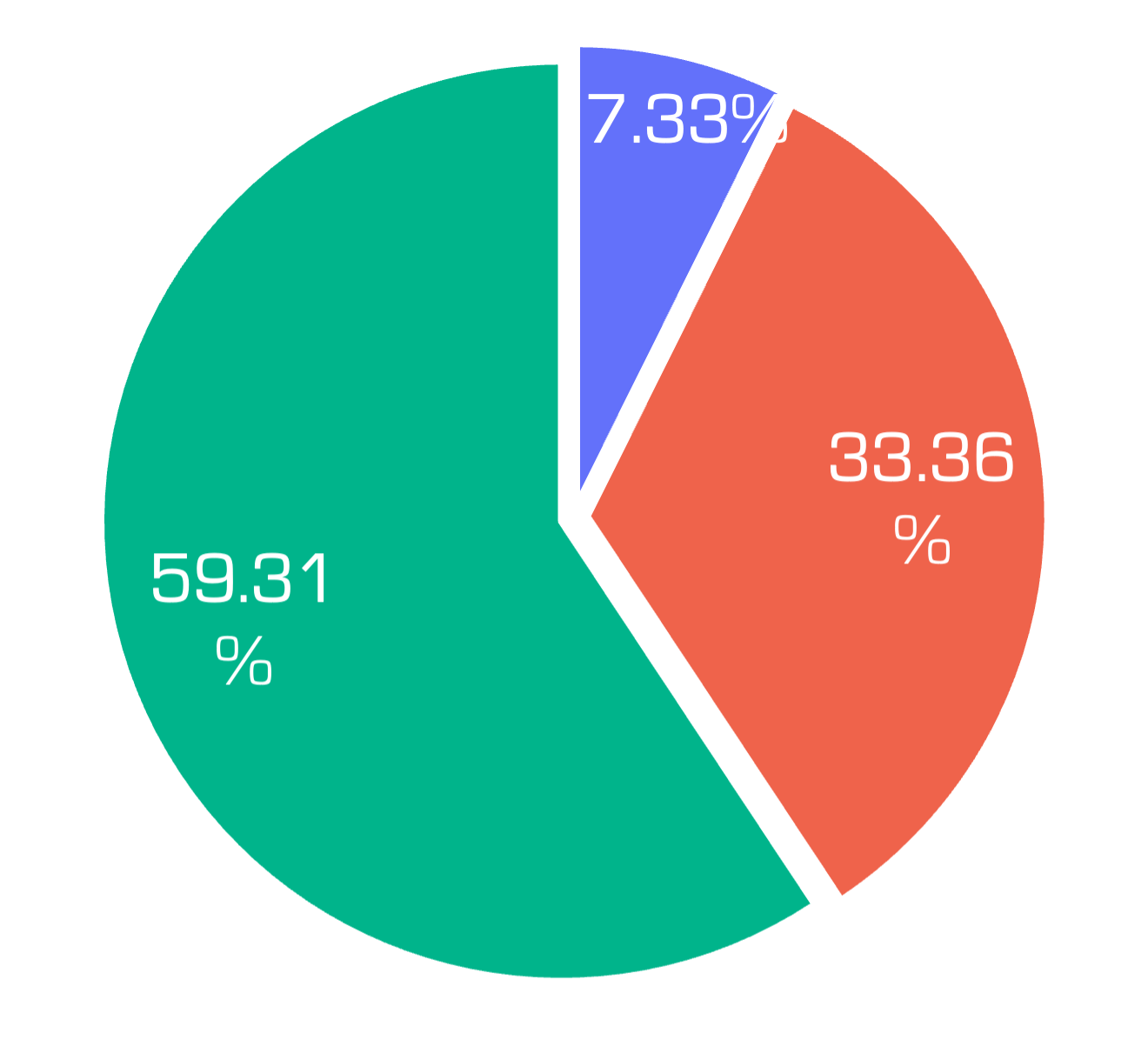

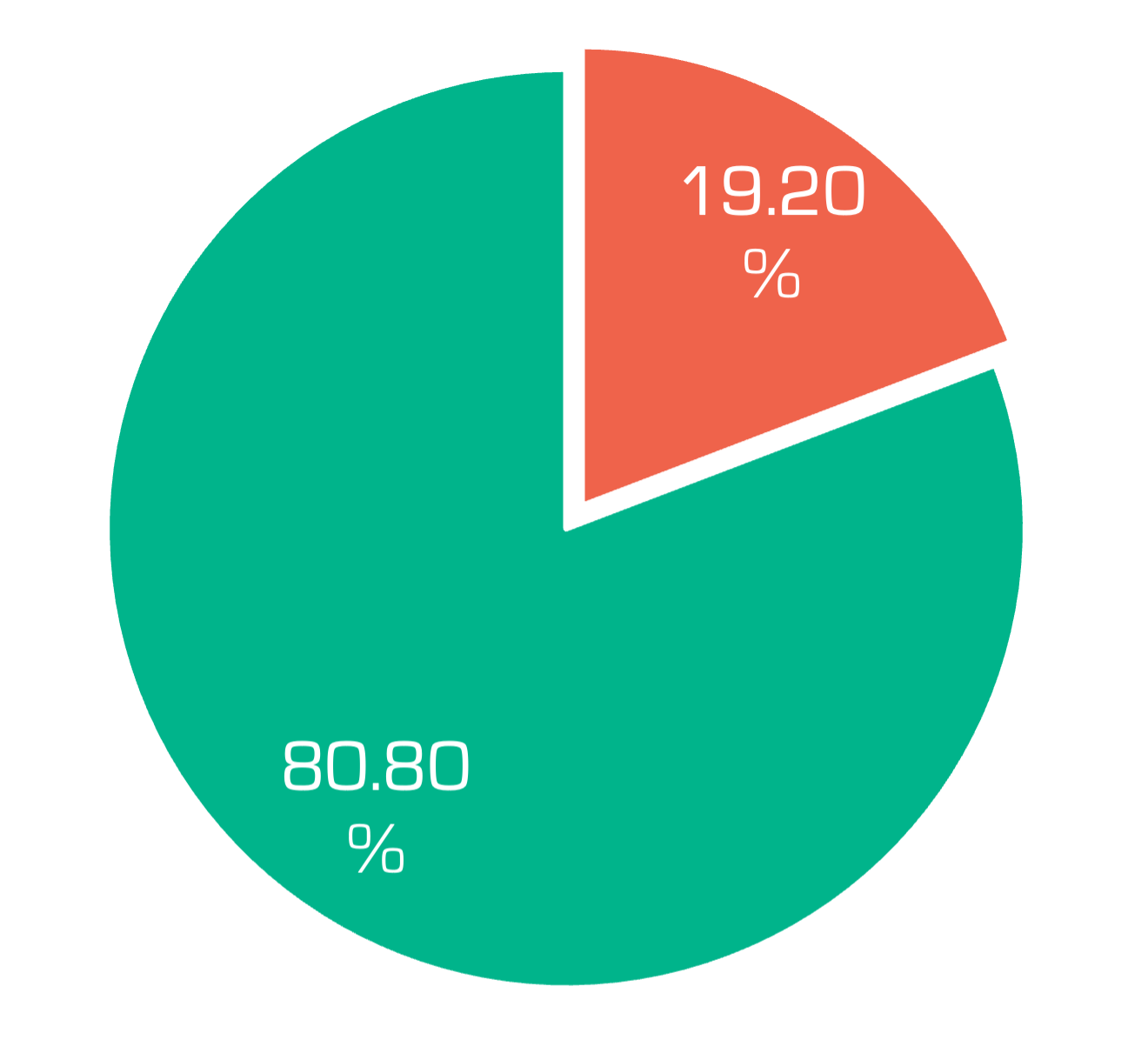

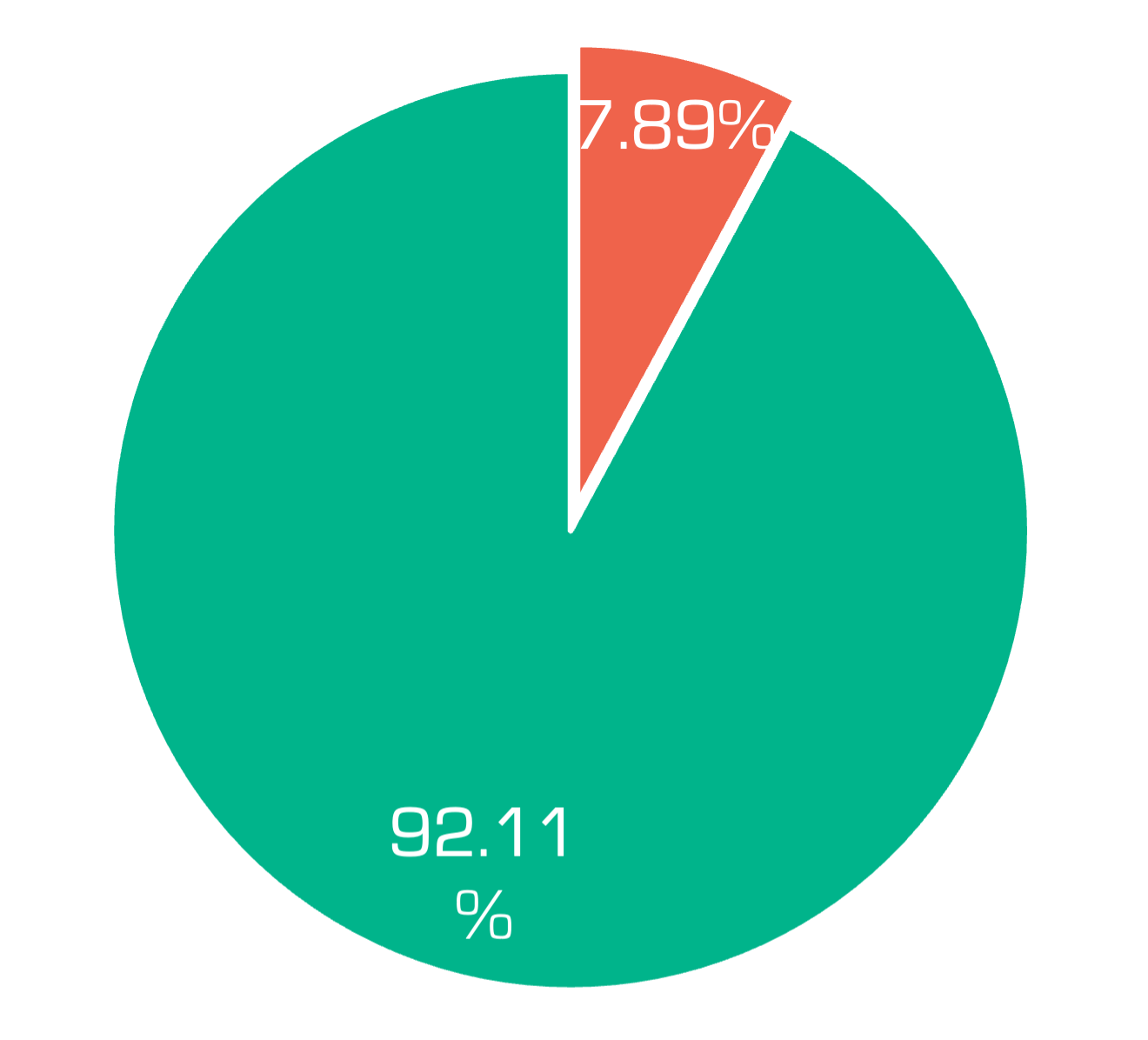

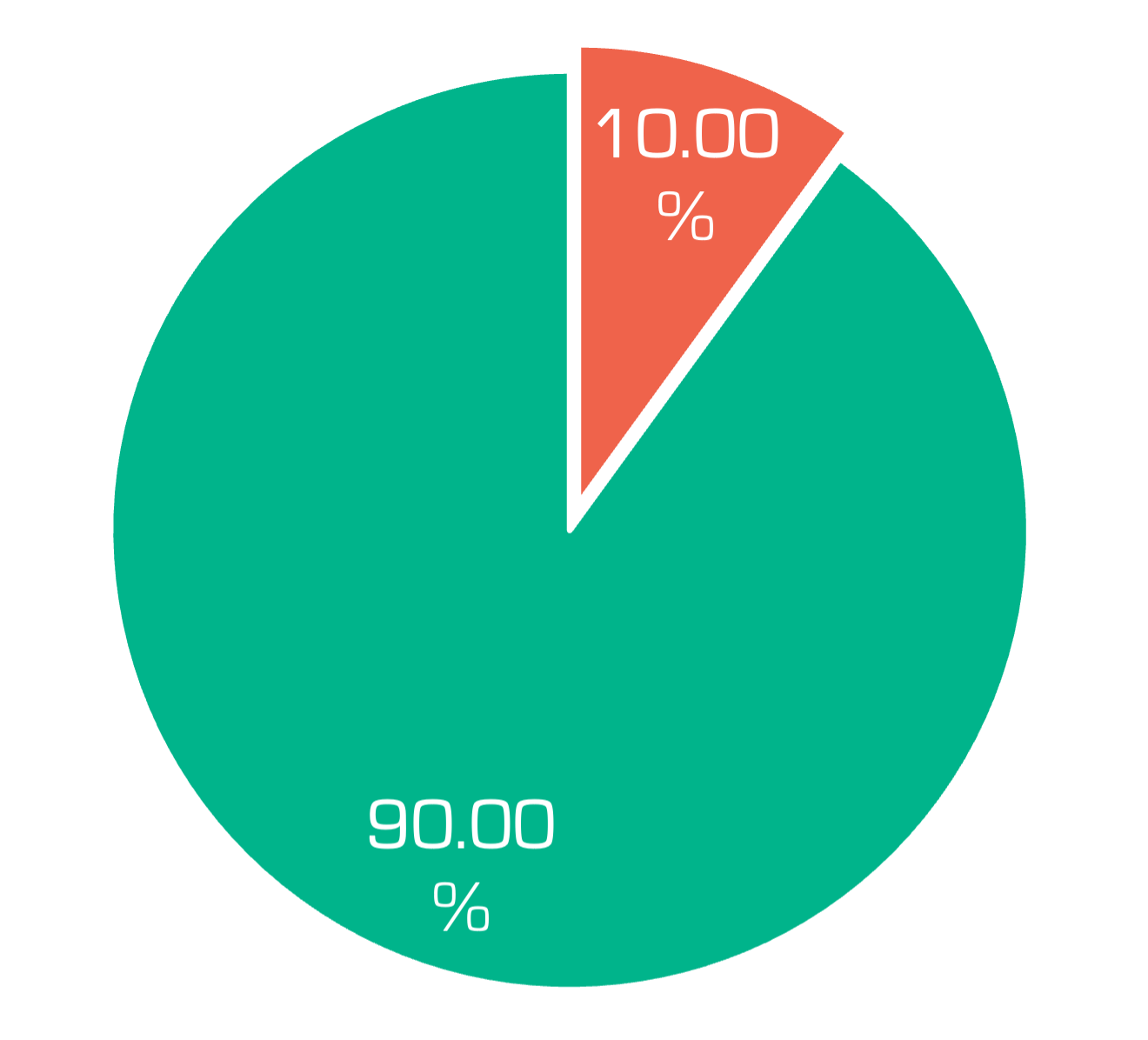

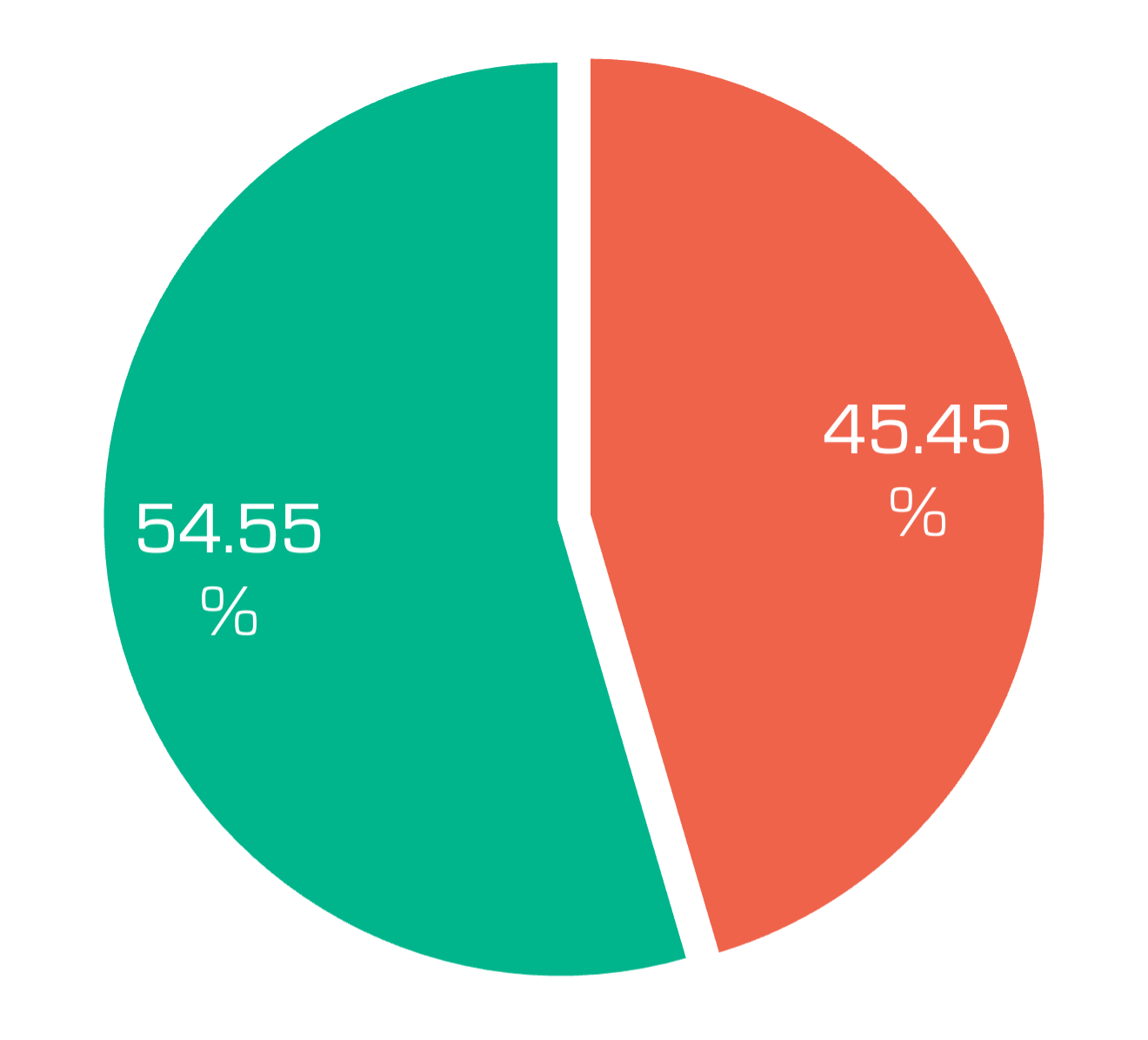

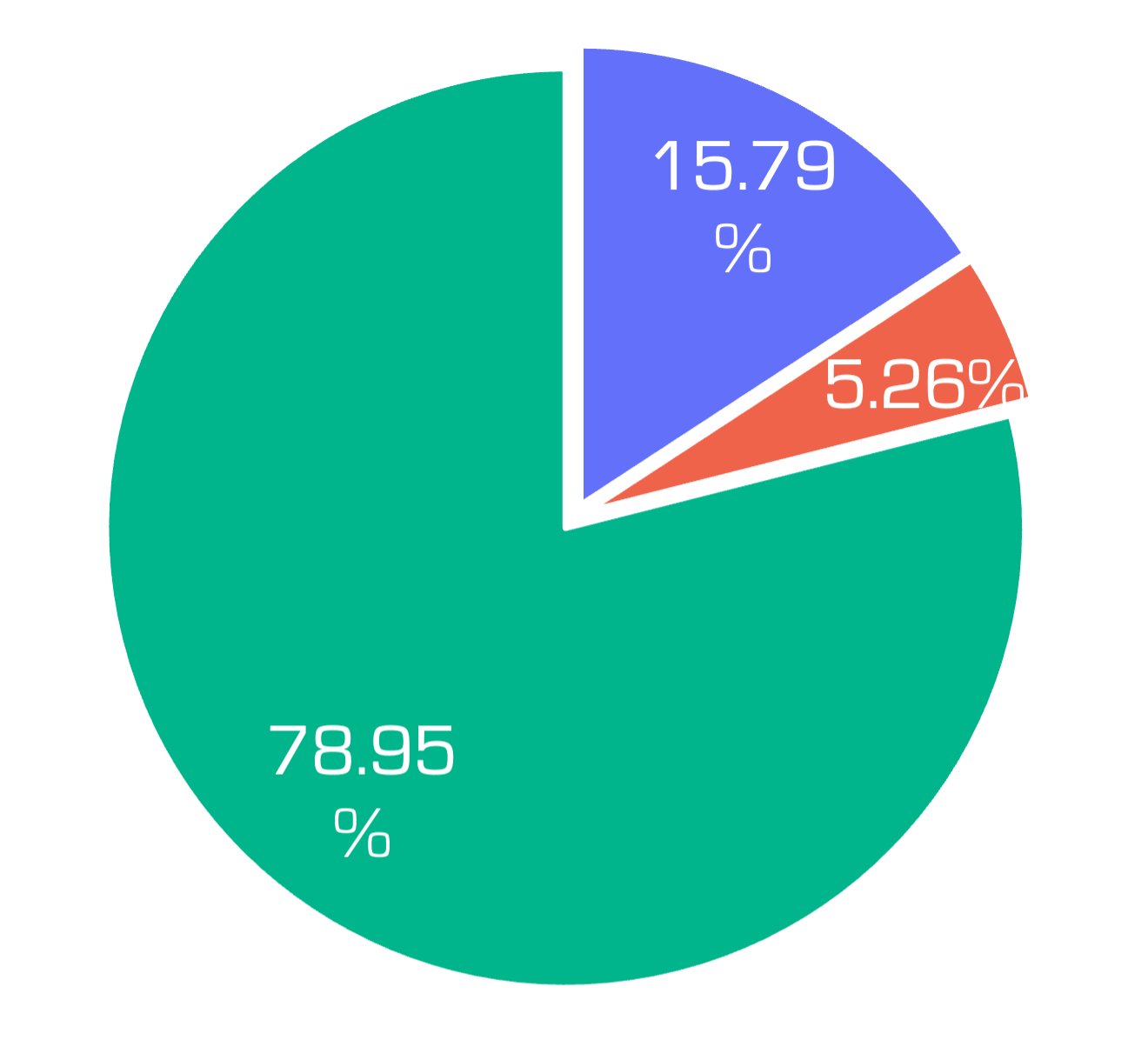

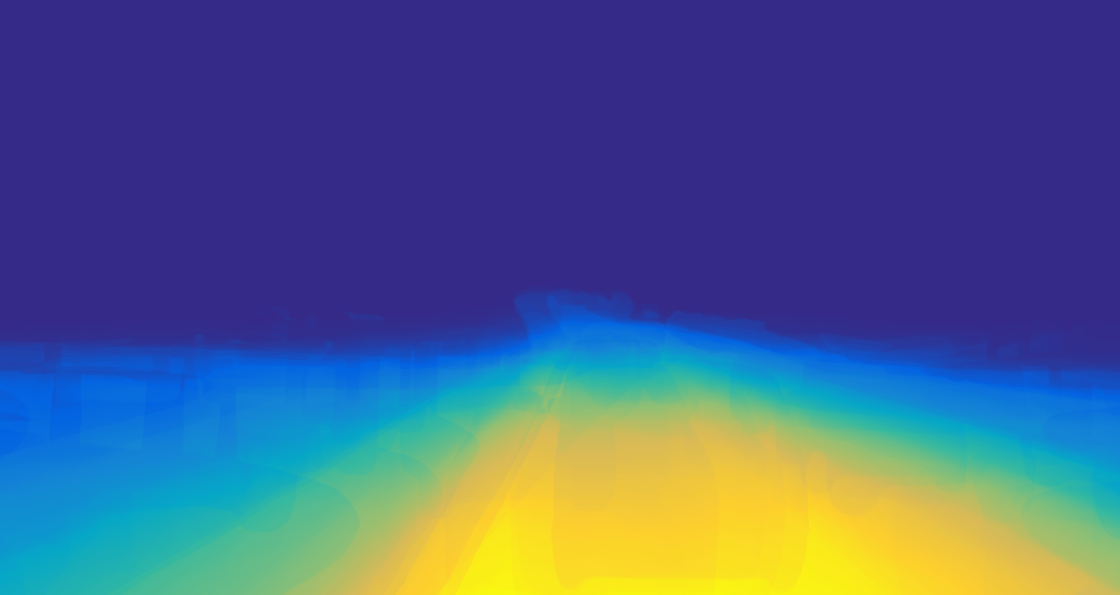

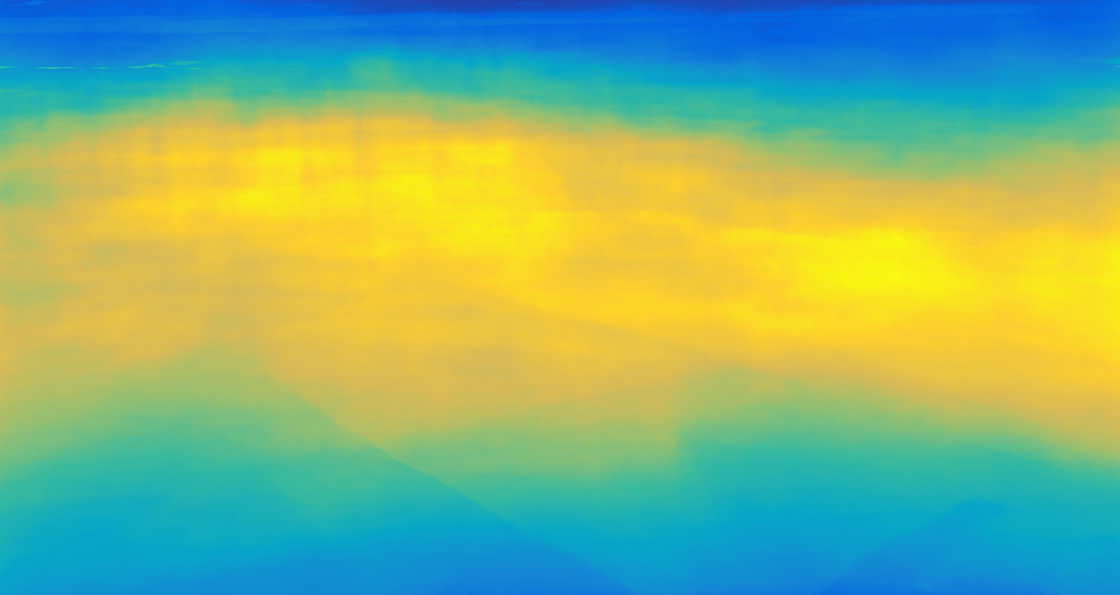

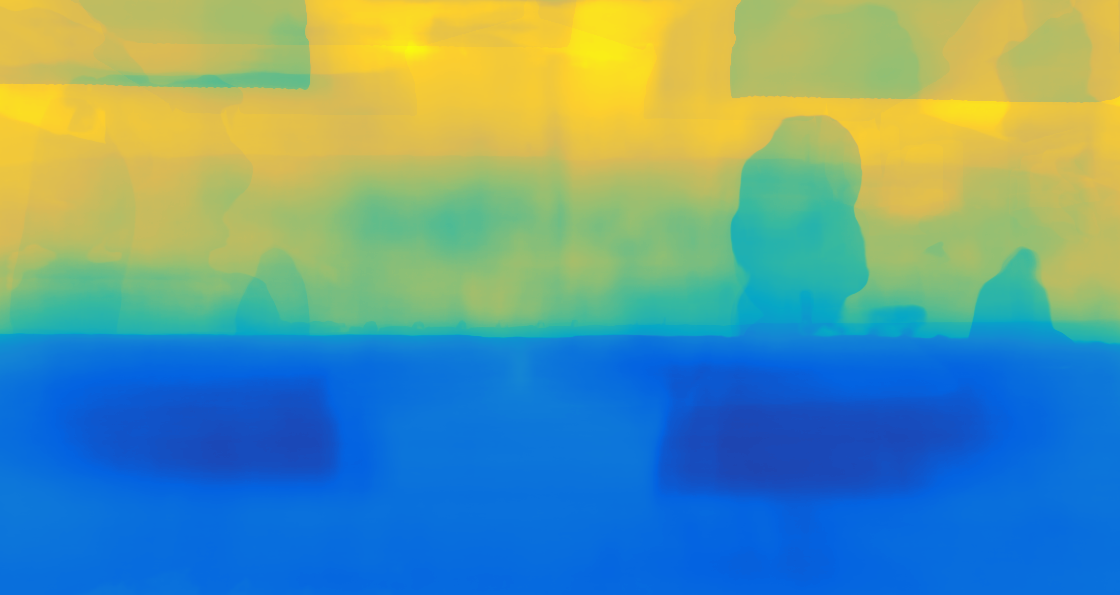

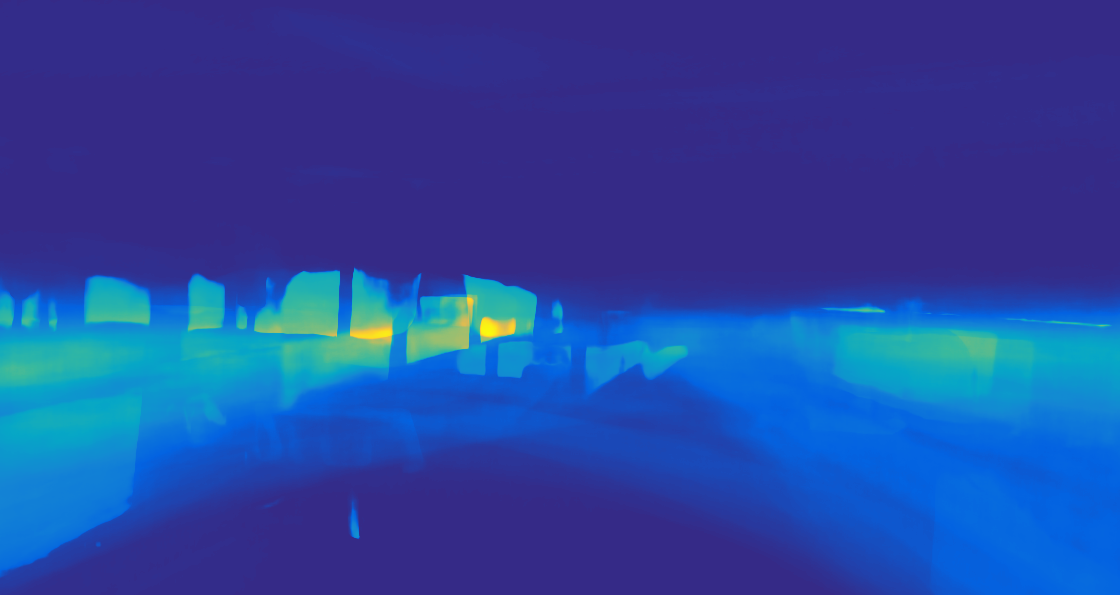

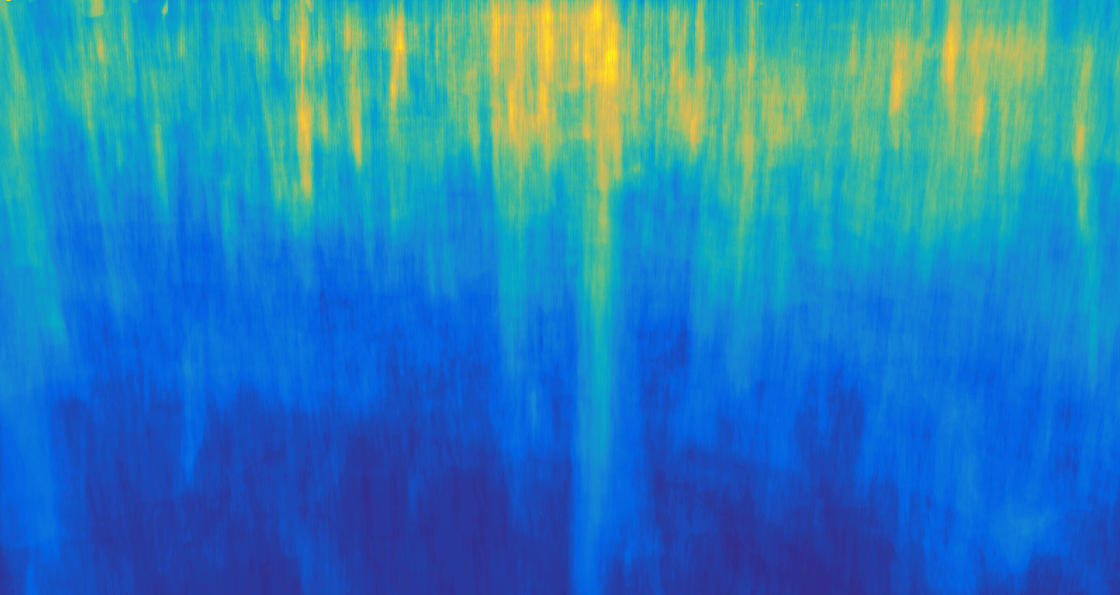

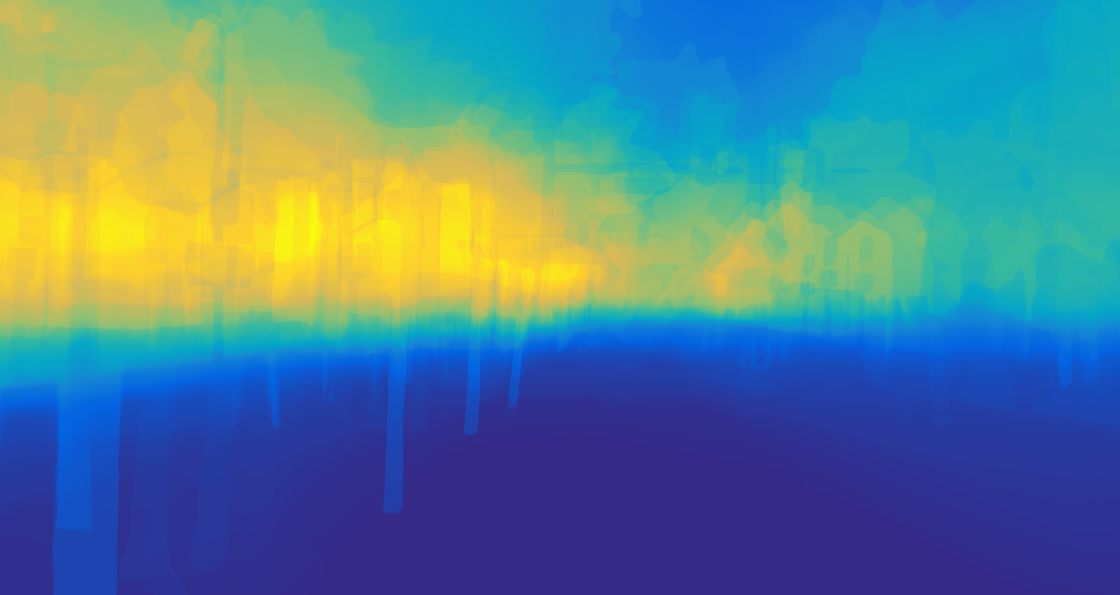

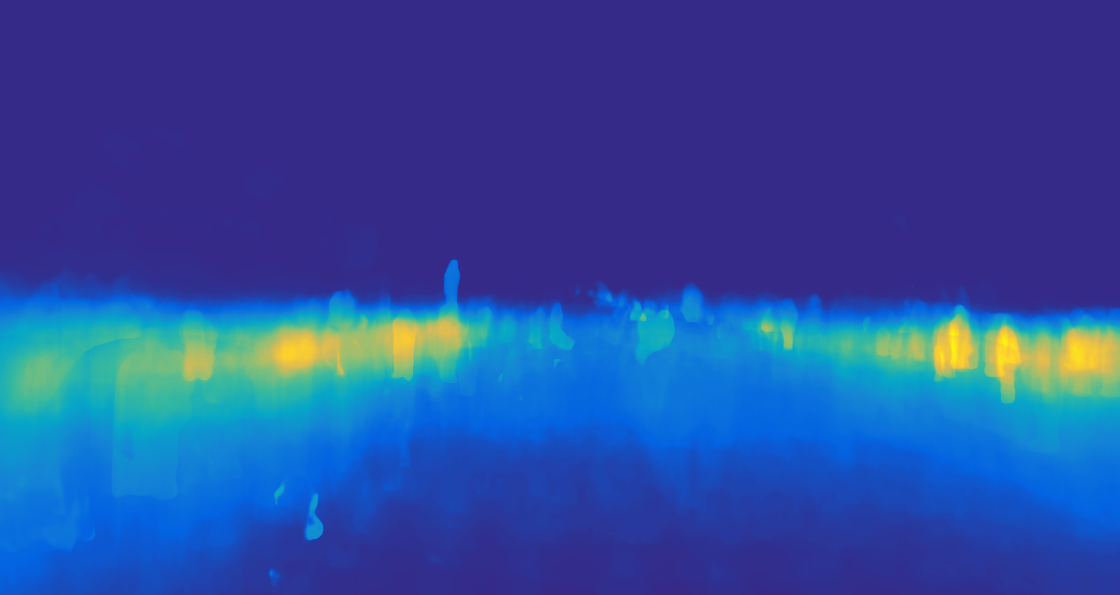

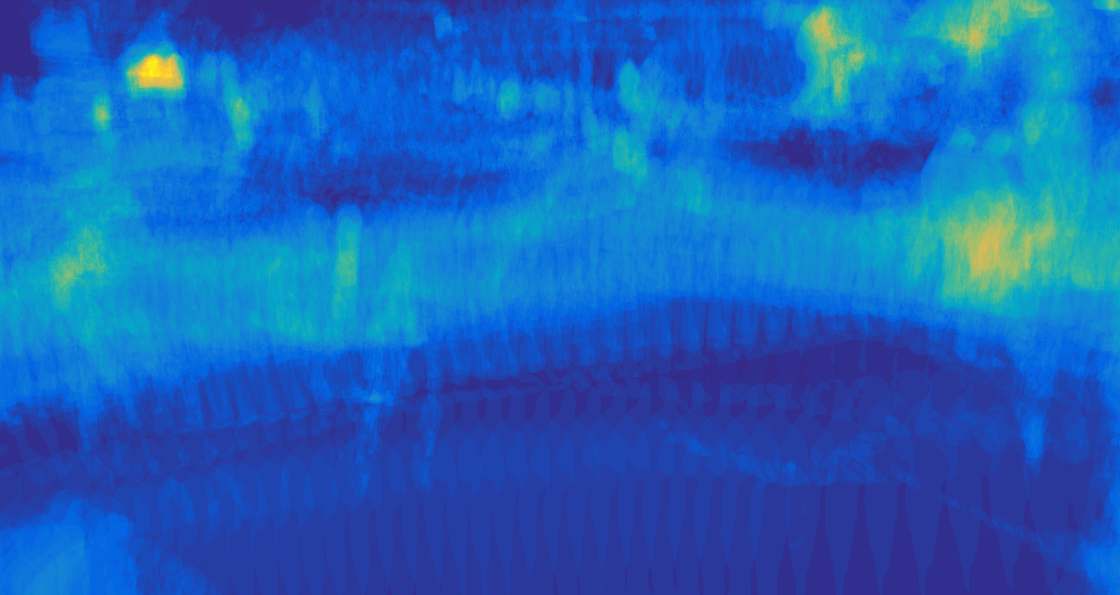

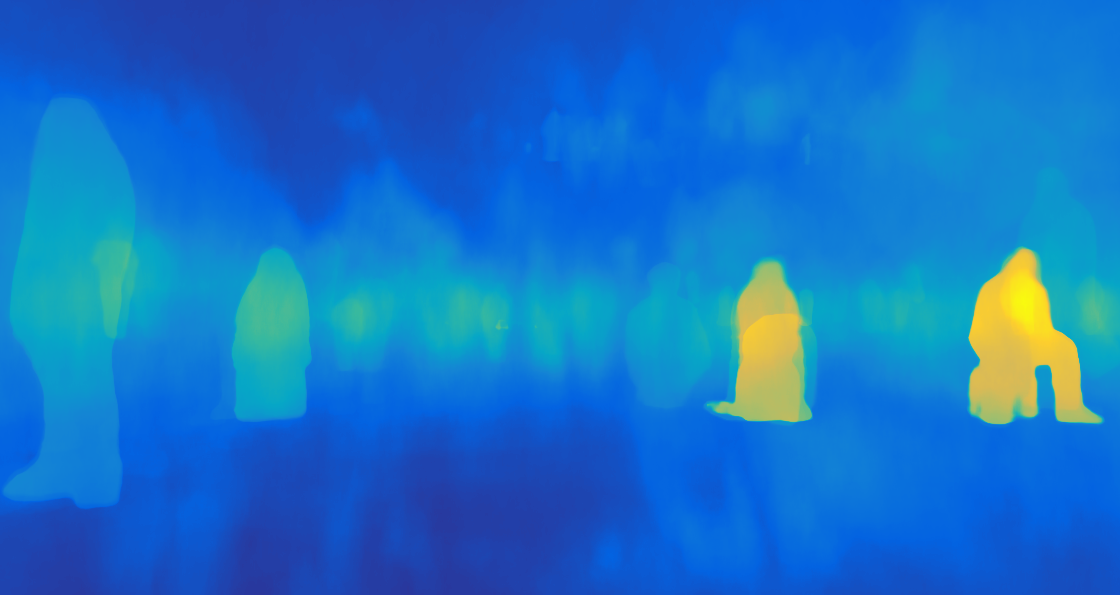

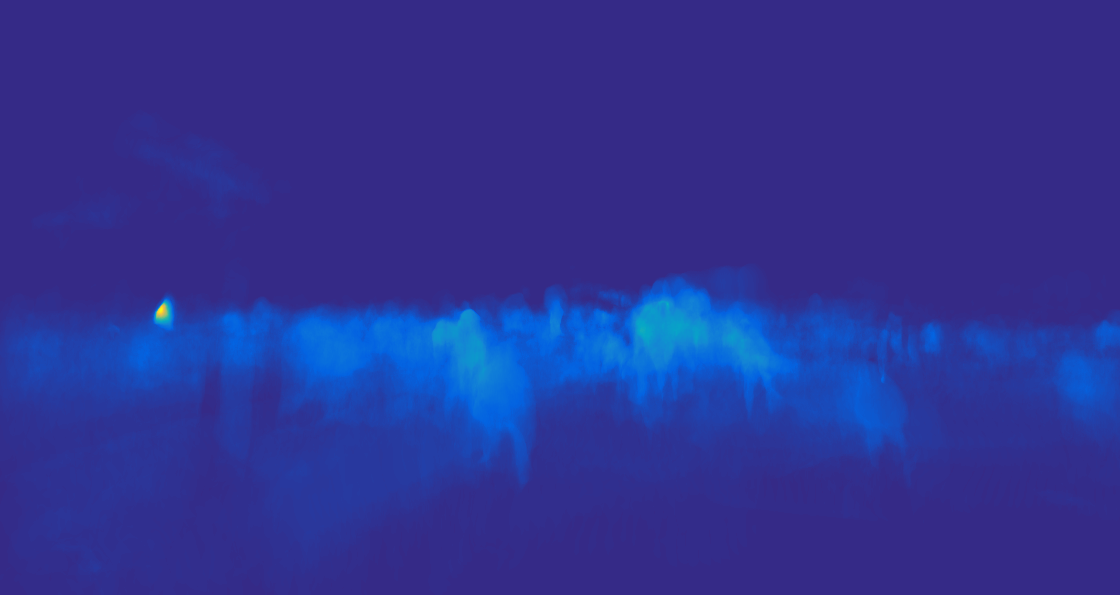

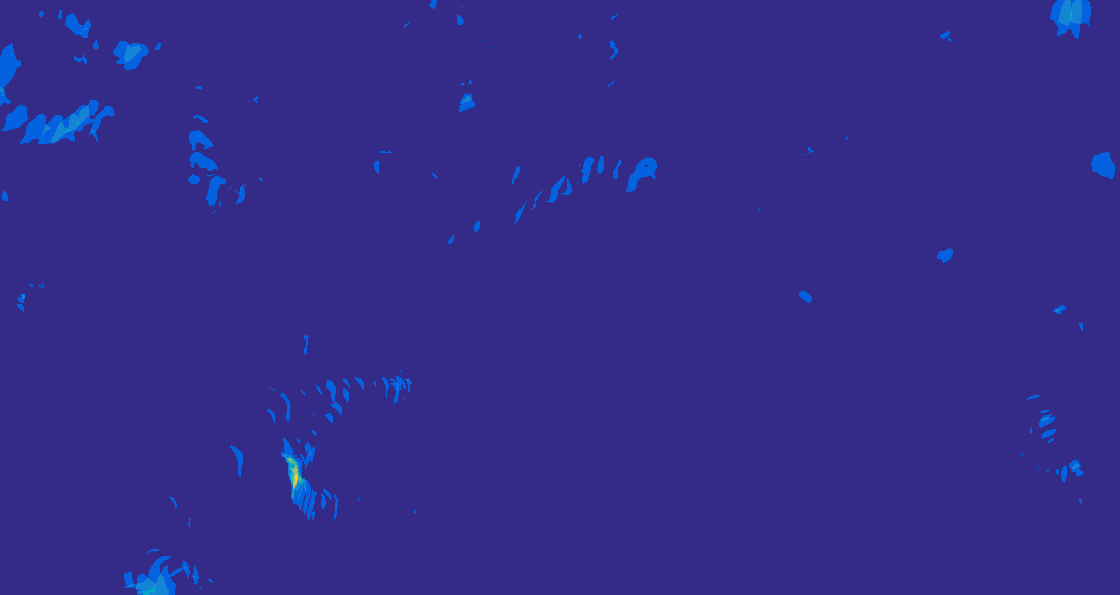

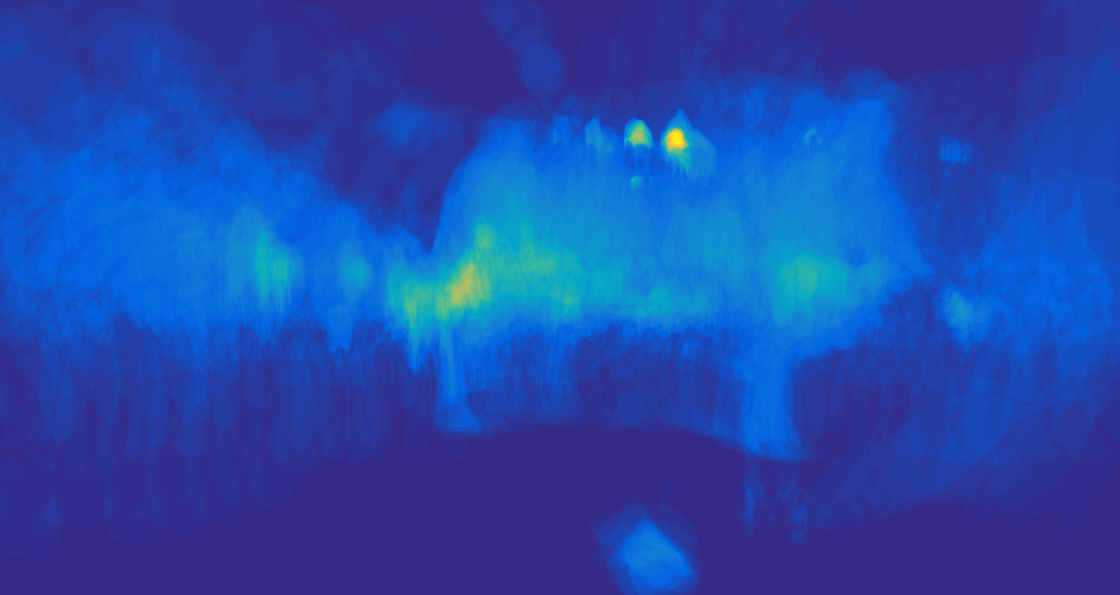

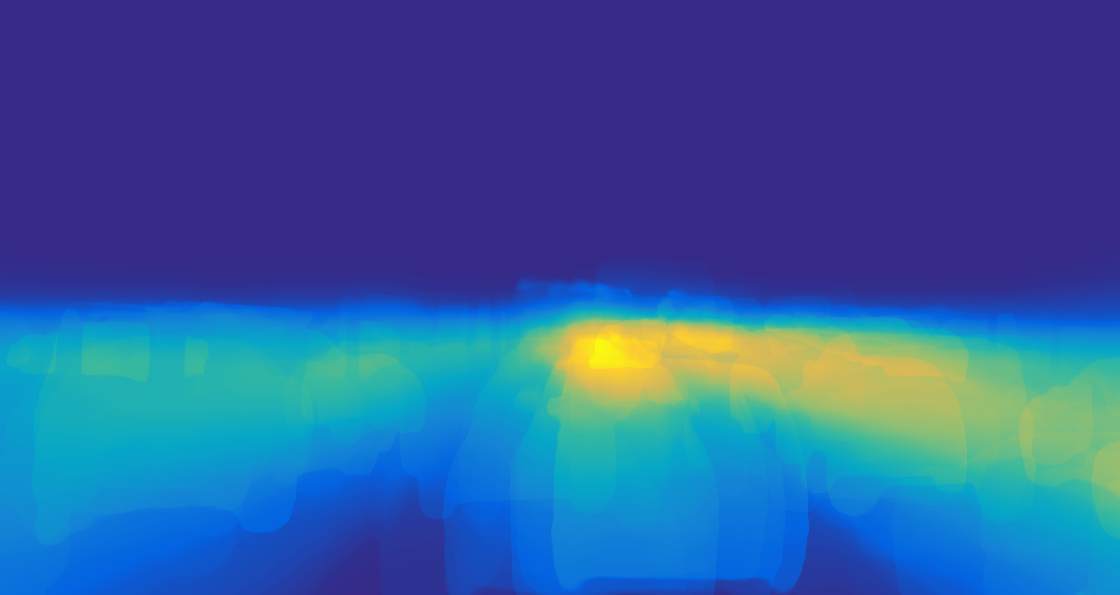

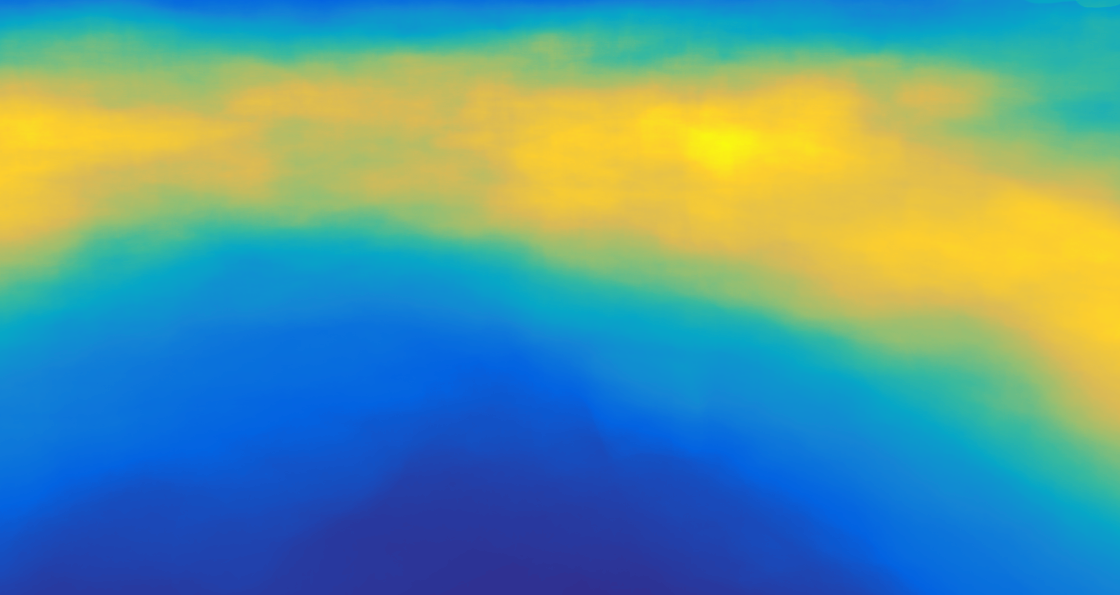

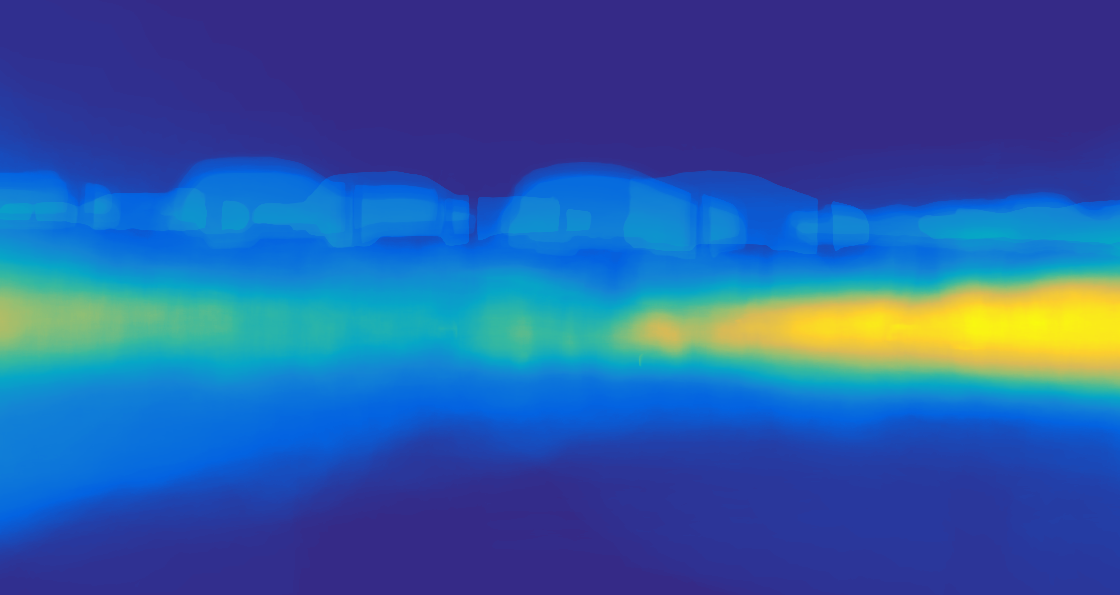

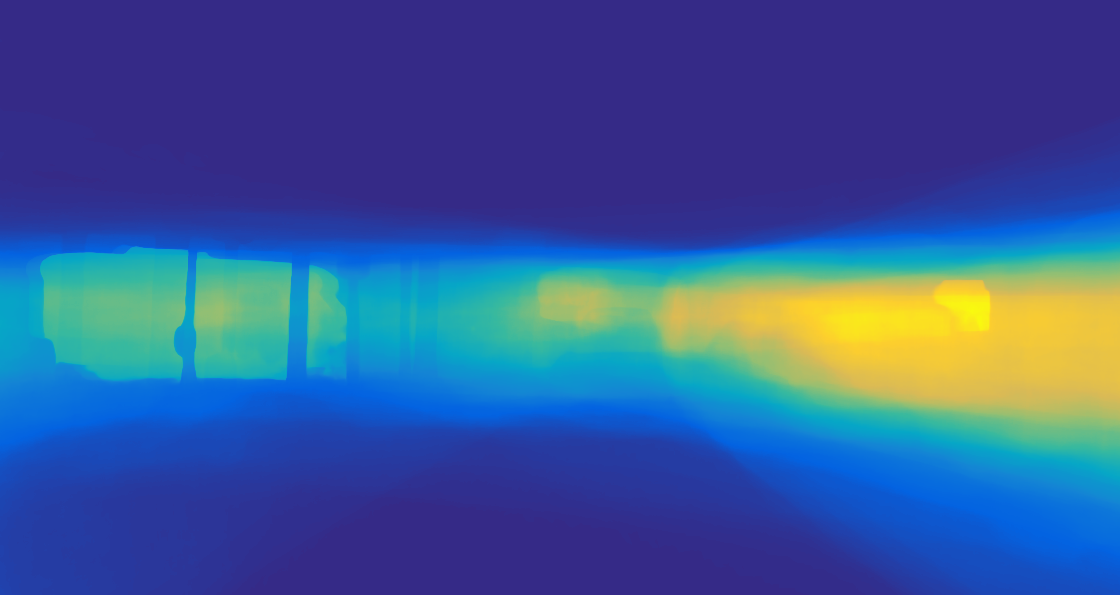

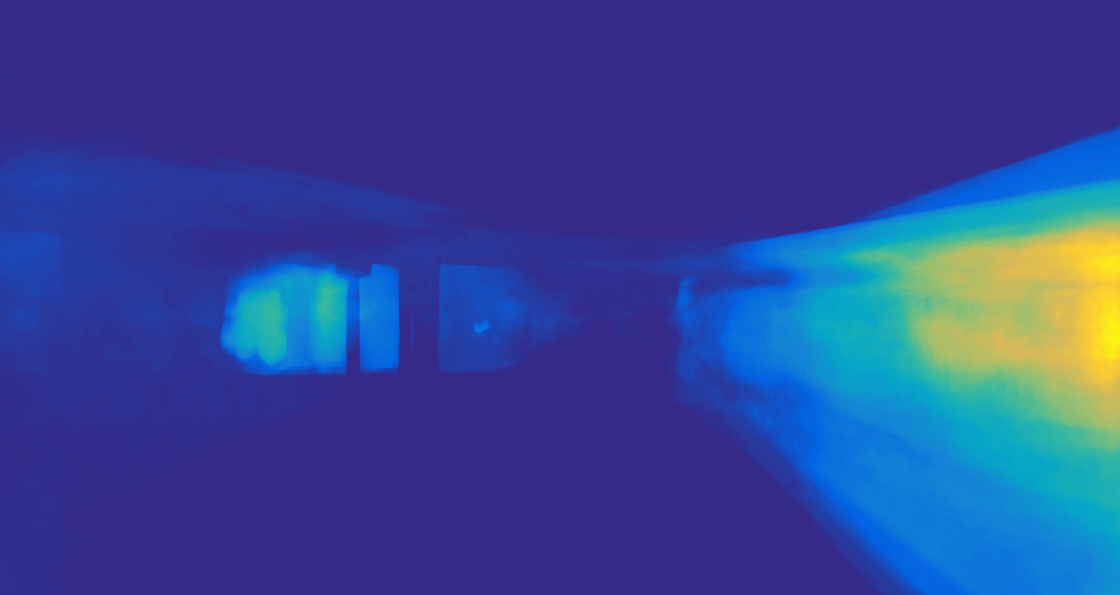

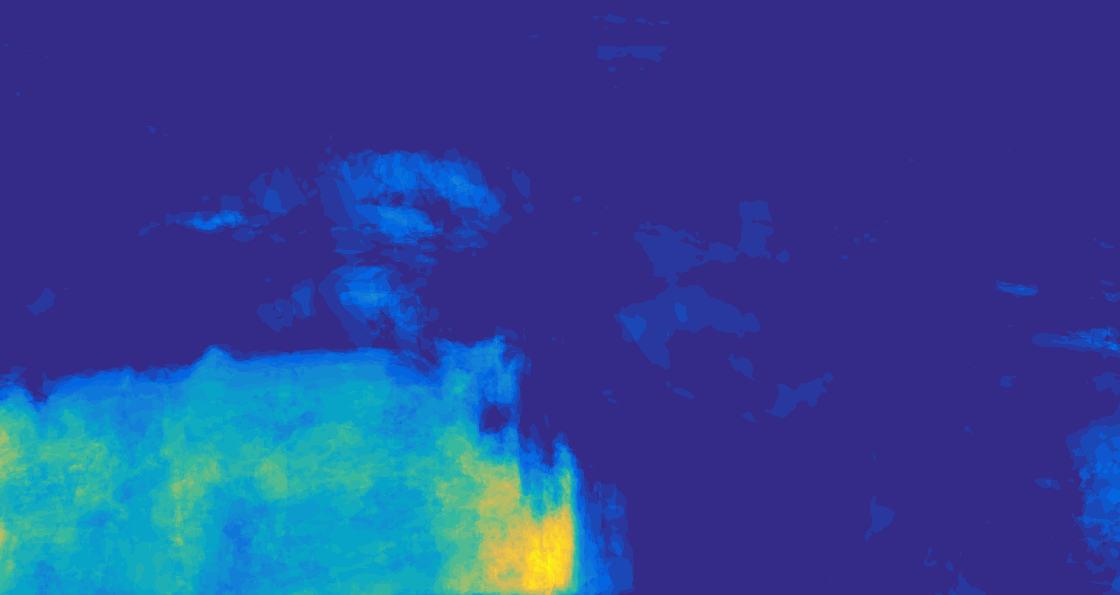

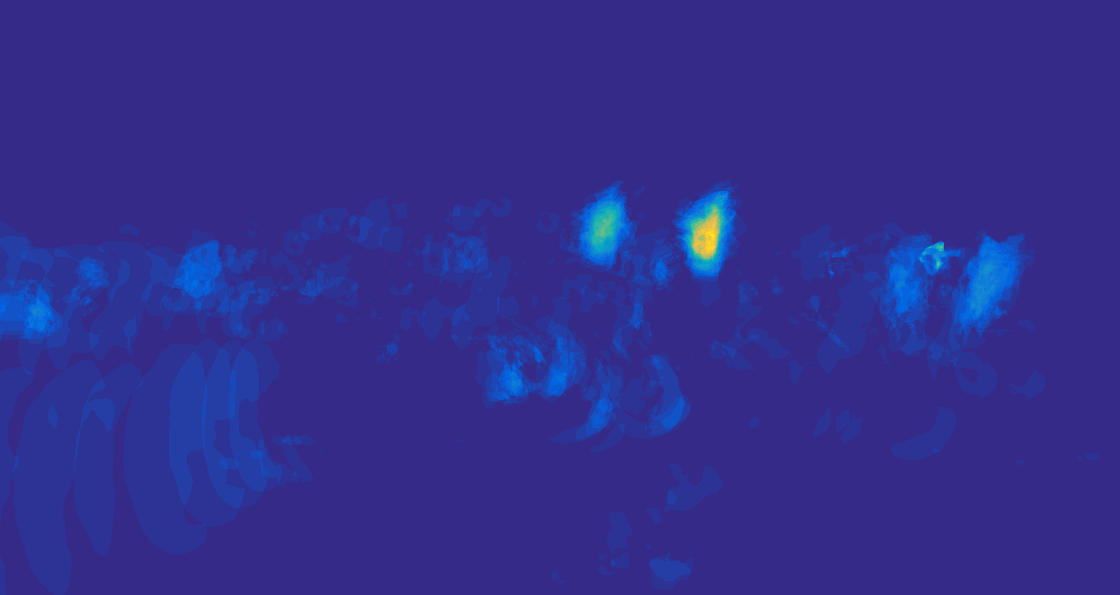

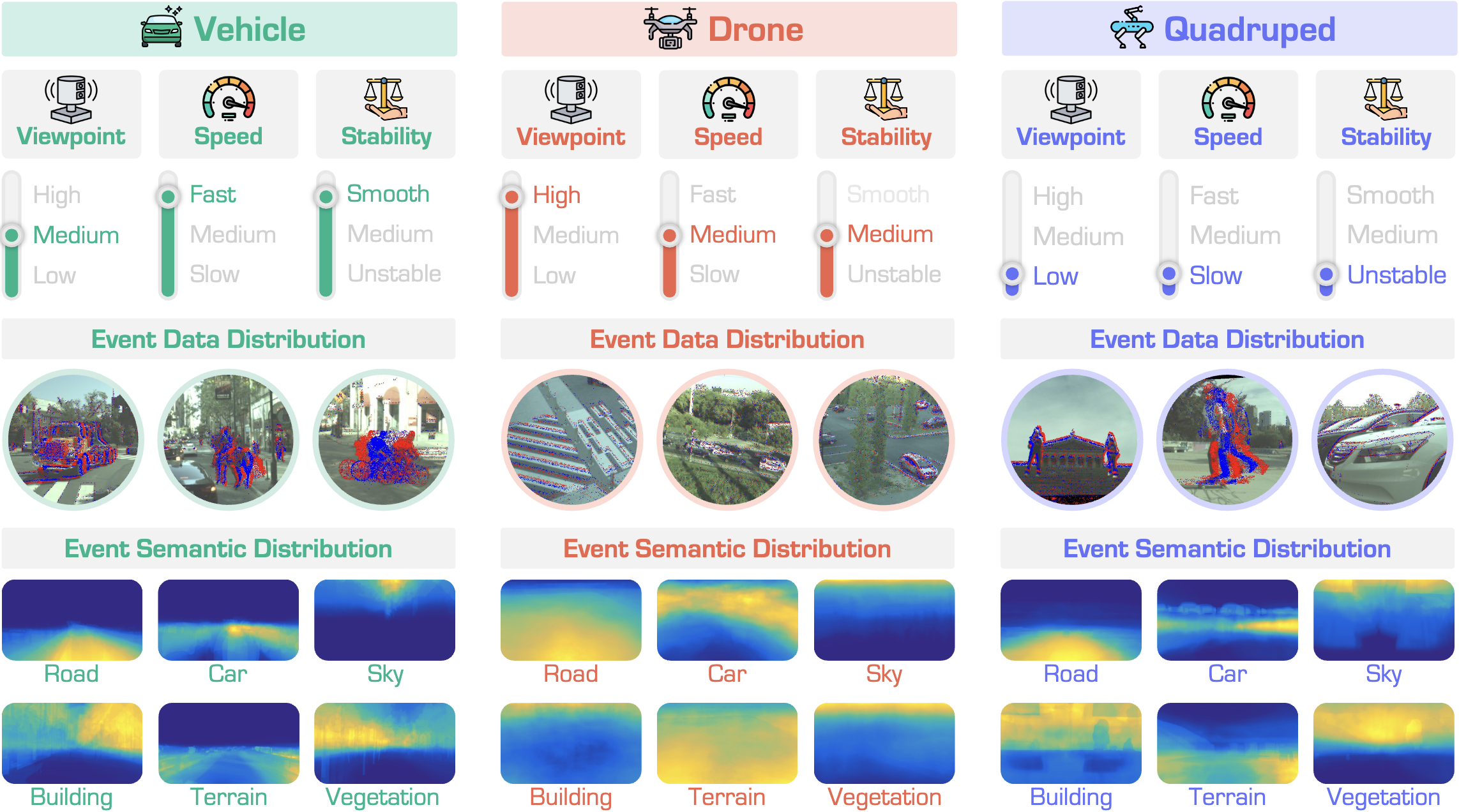

Vehicle (Pv),

Vehicle (Pv),

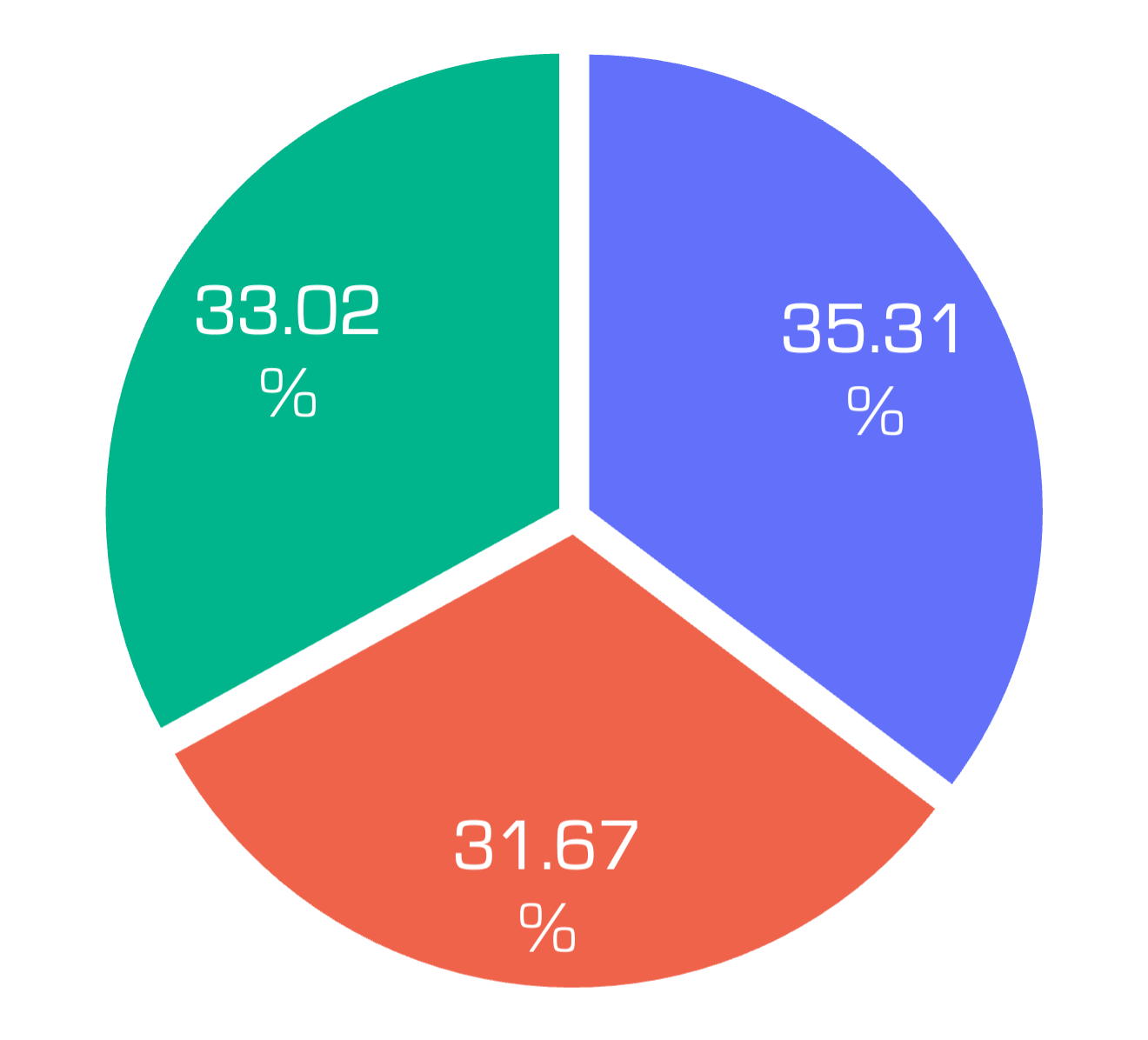

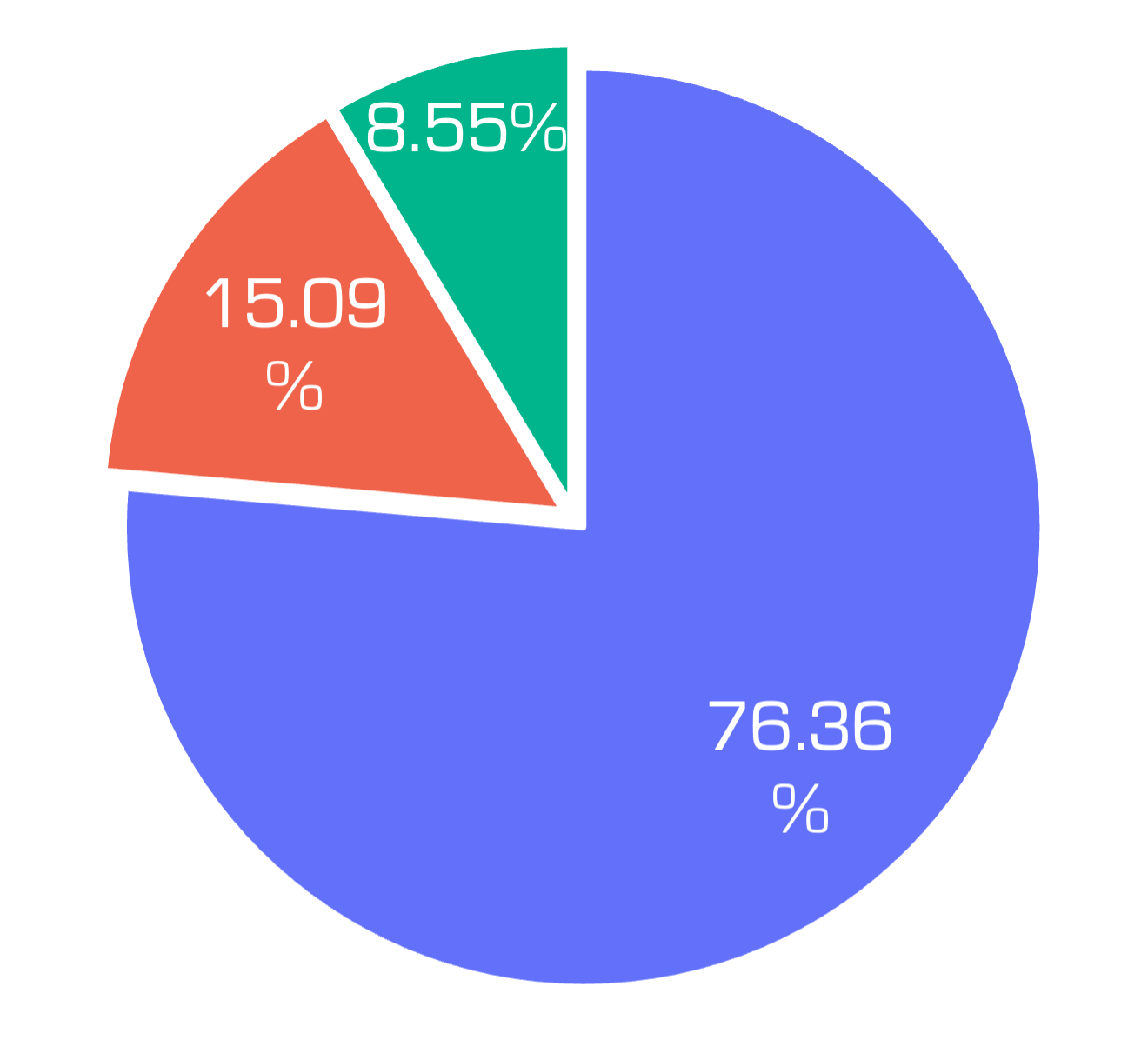

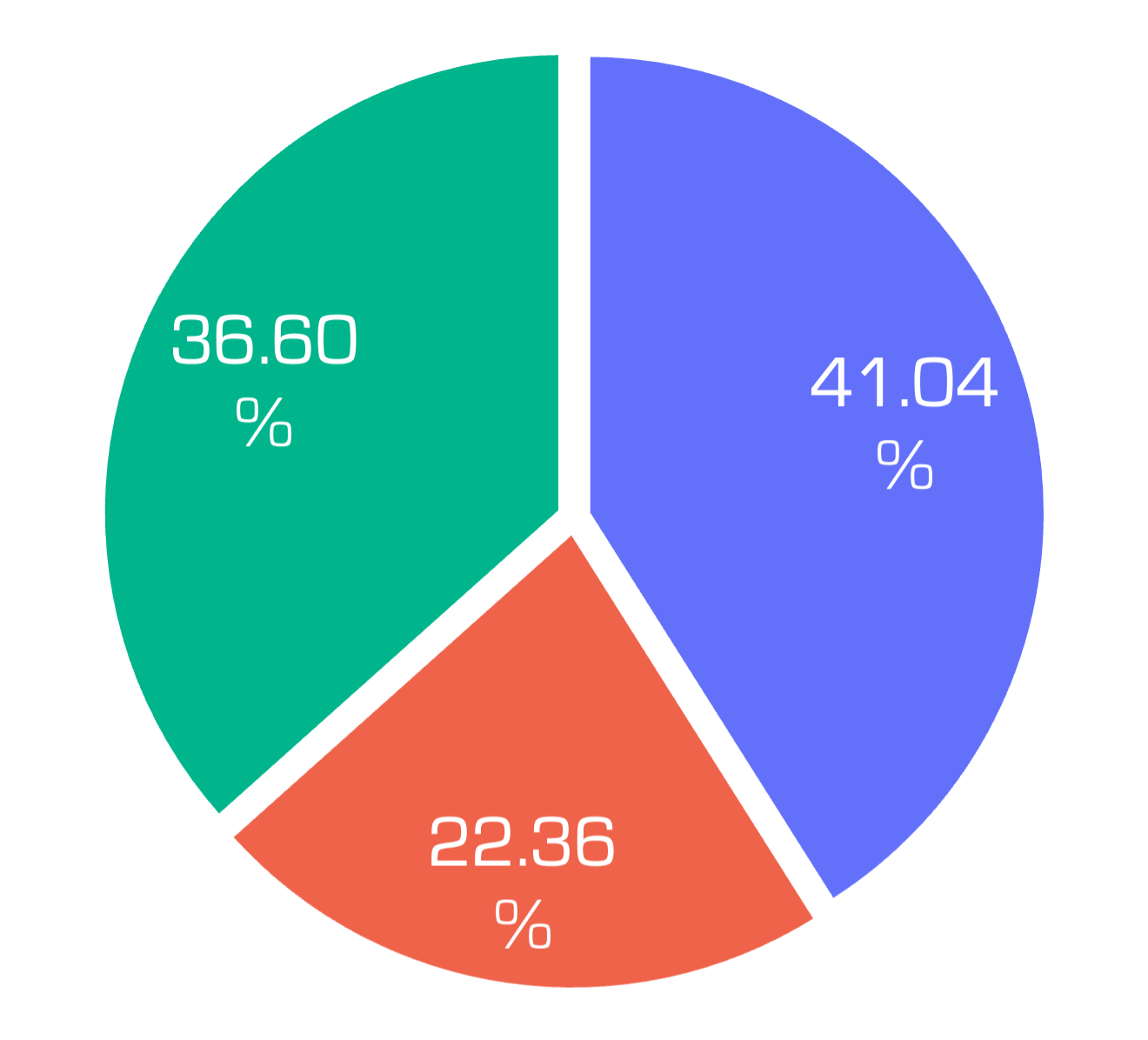

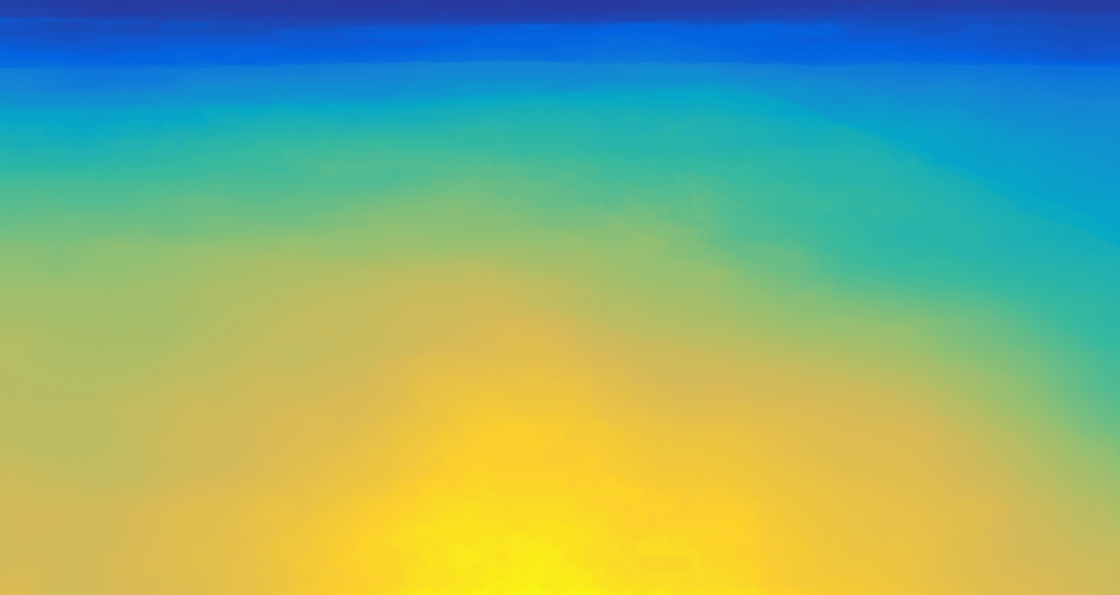

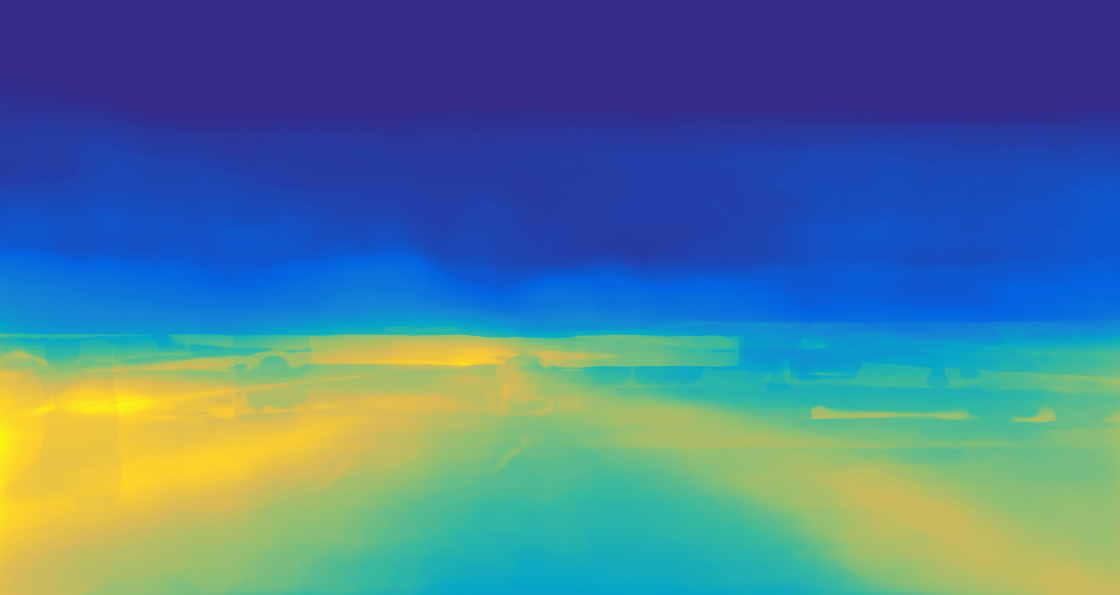

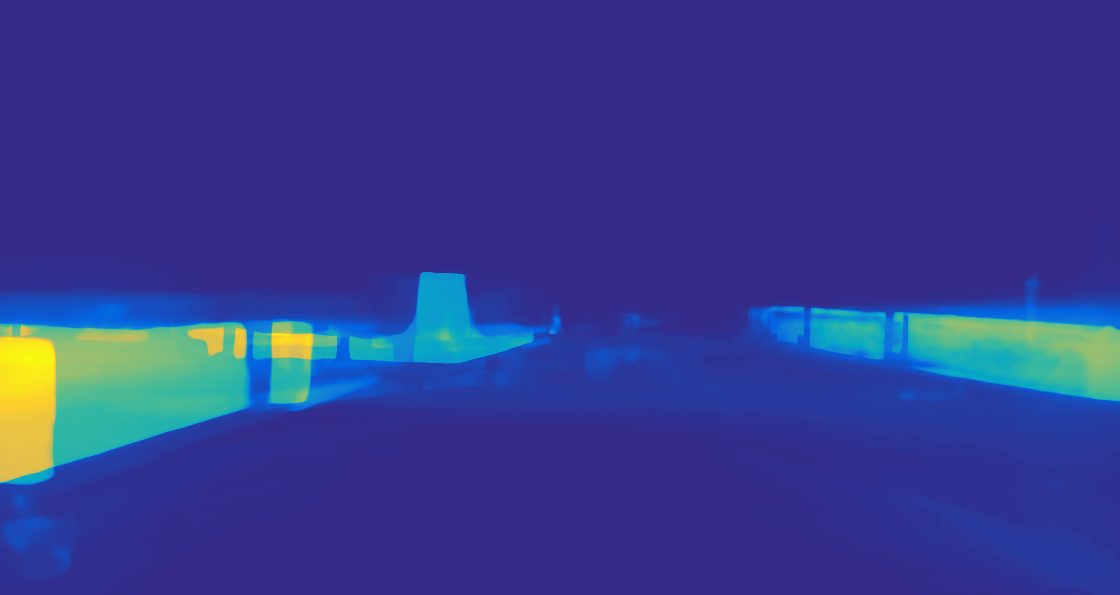

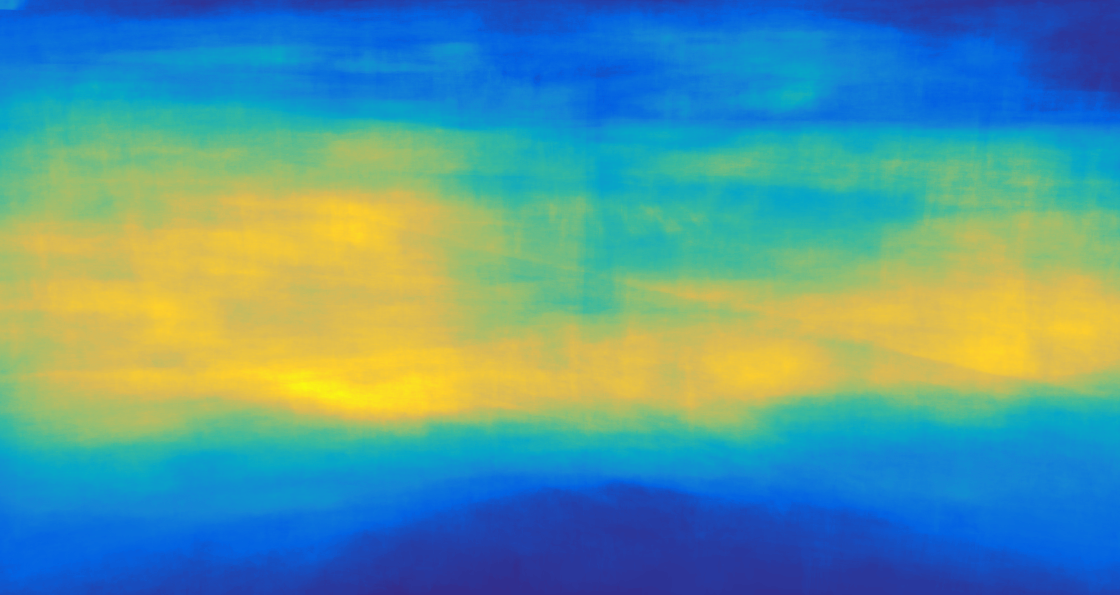

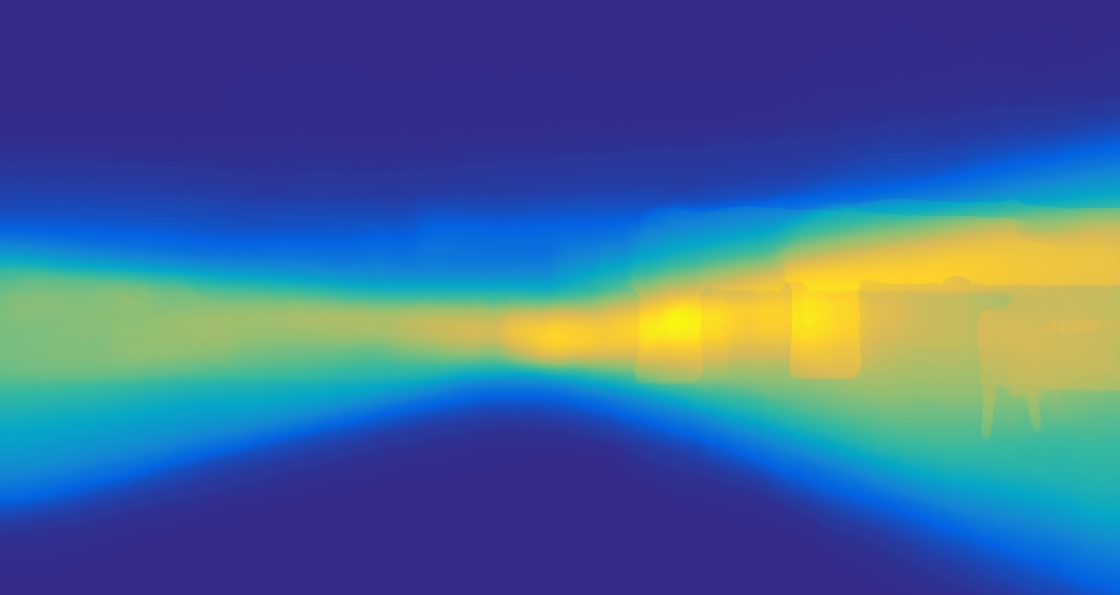

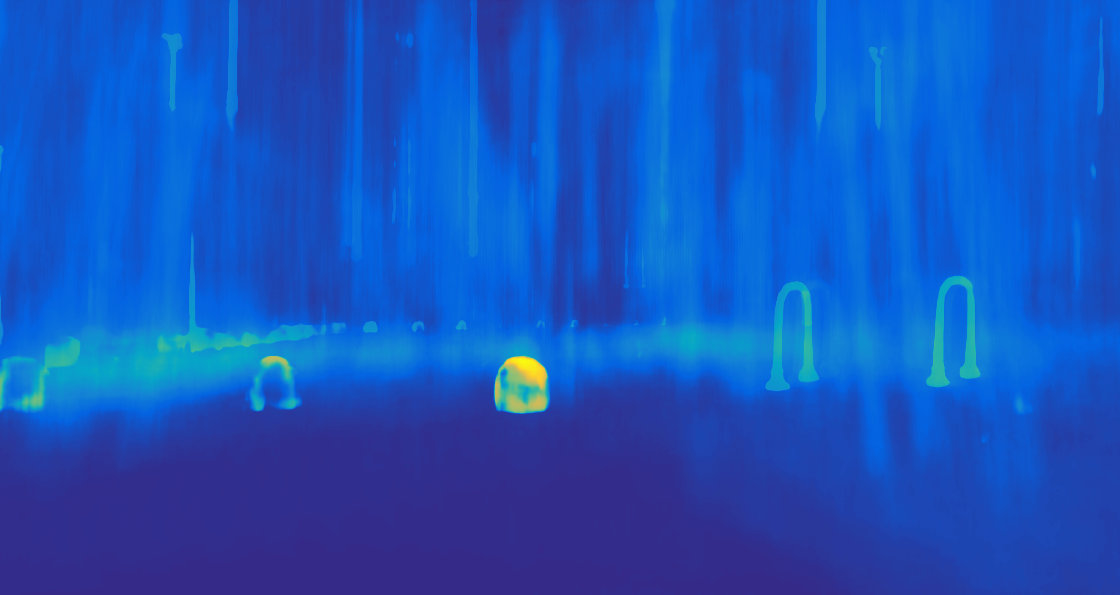

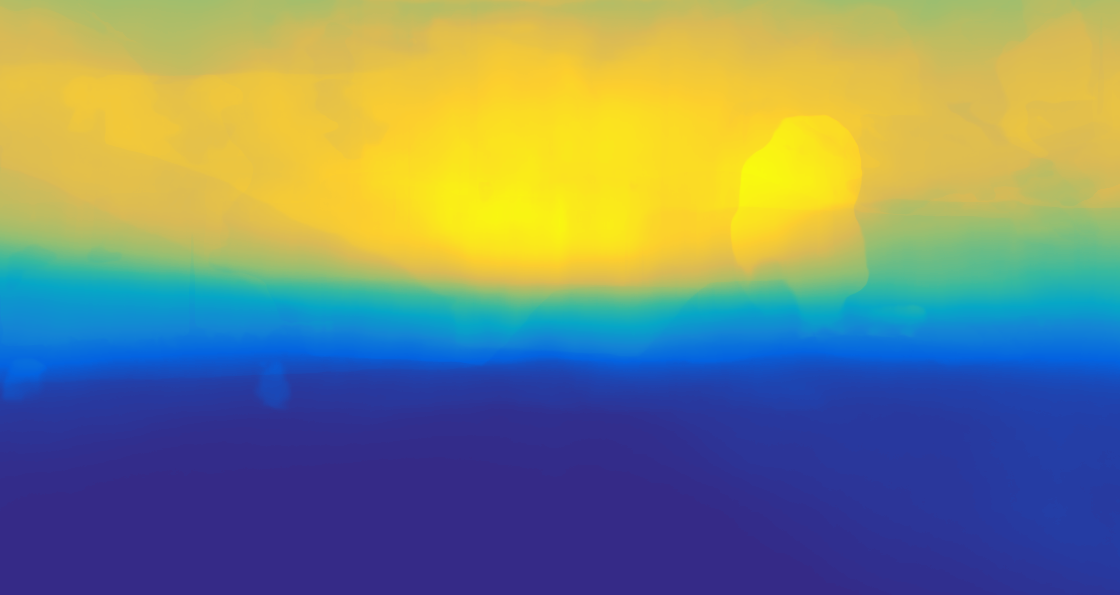

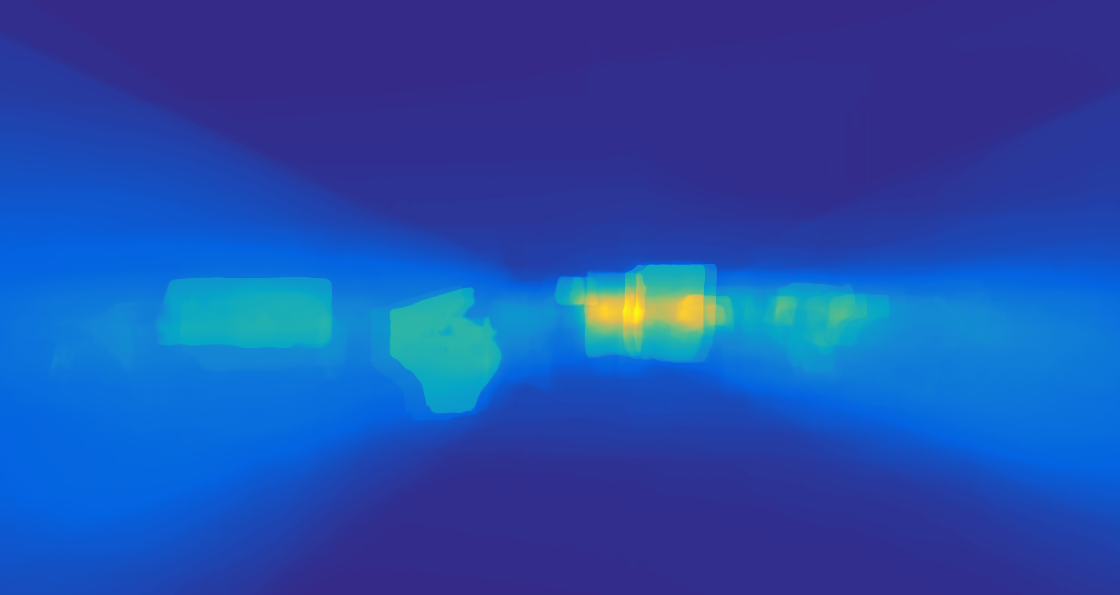

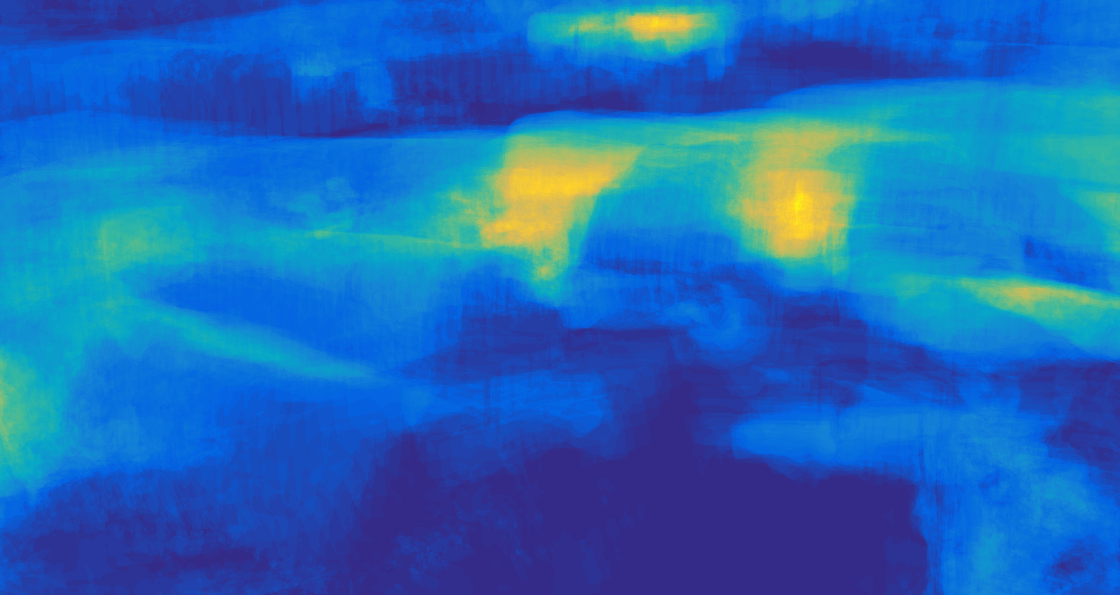

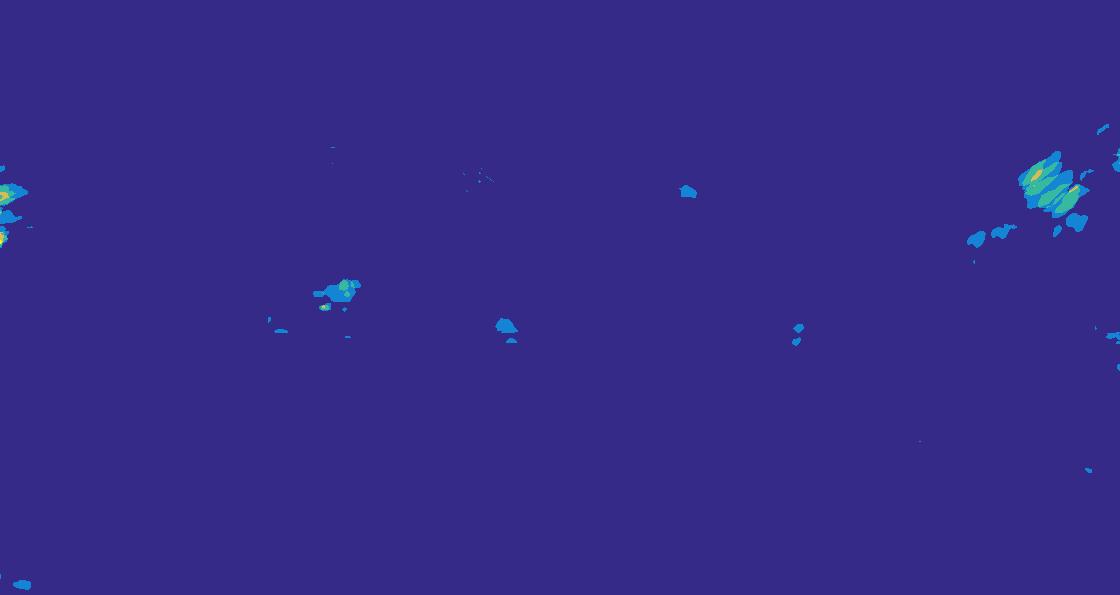

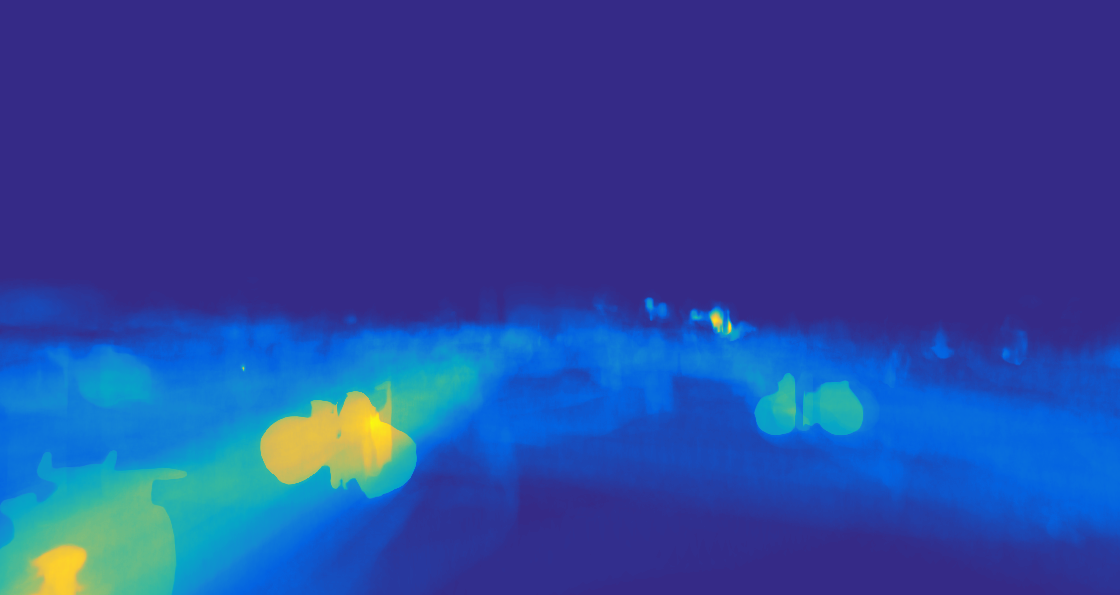

Drone (Pd), and

Drone (Pd), and

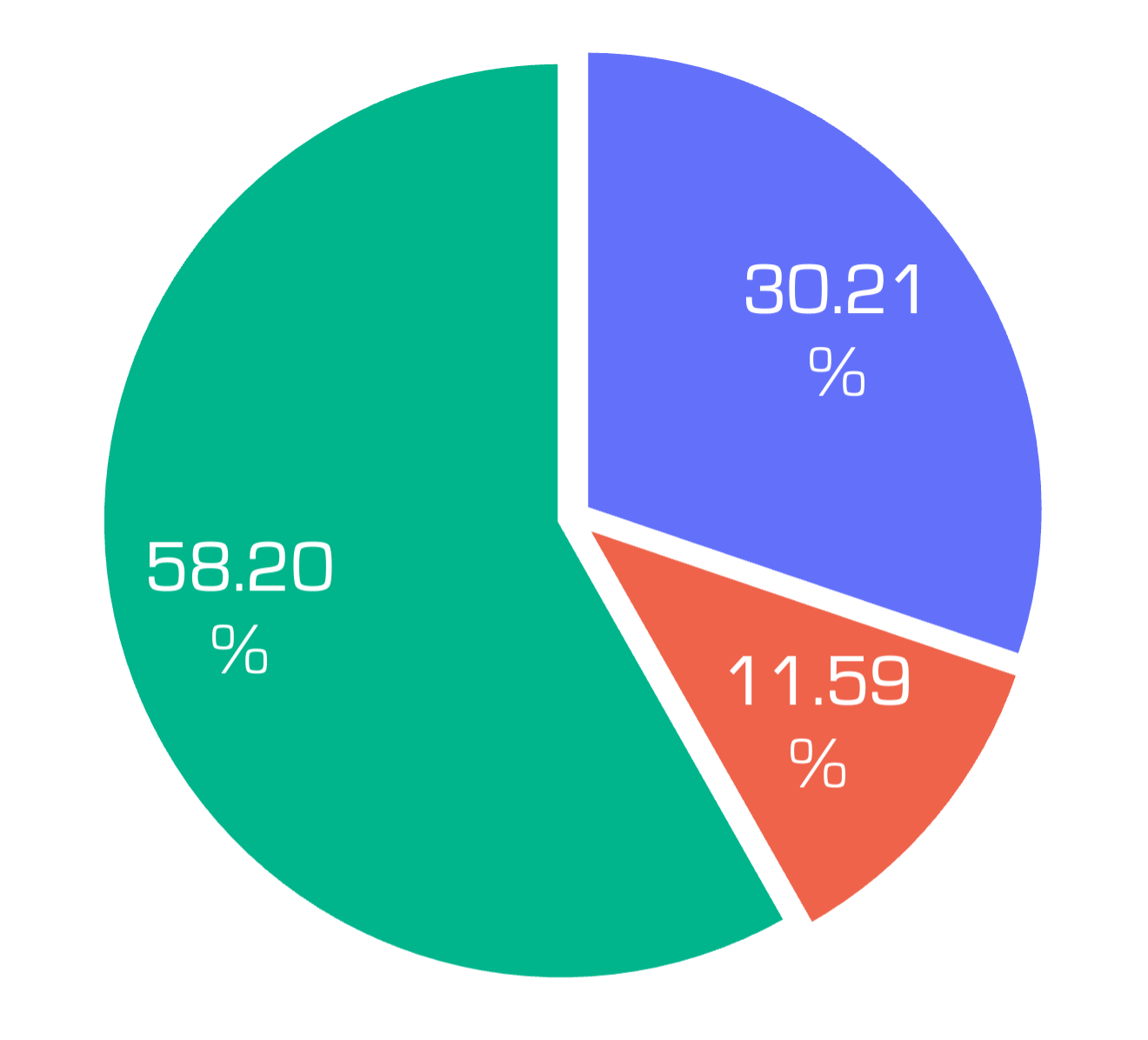

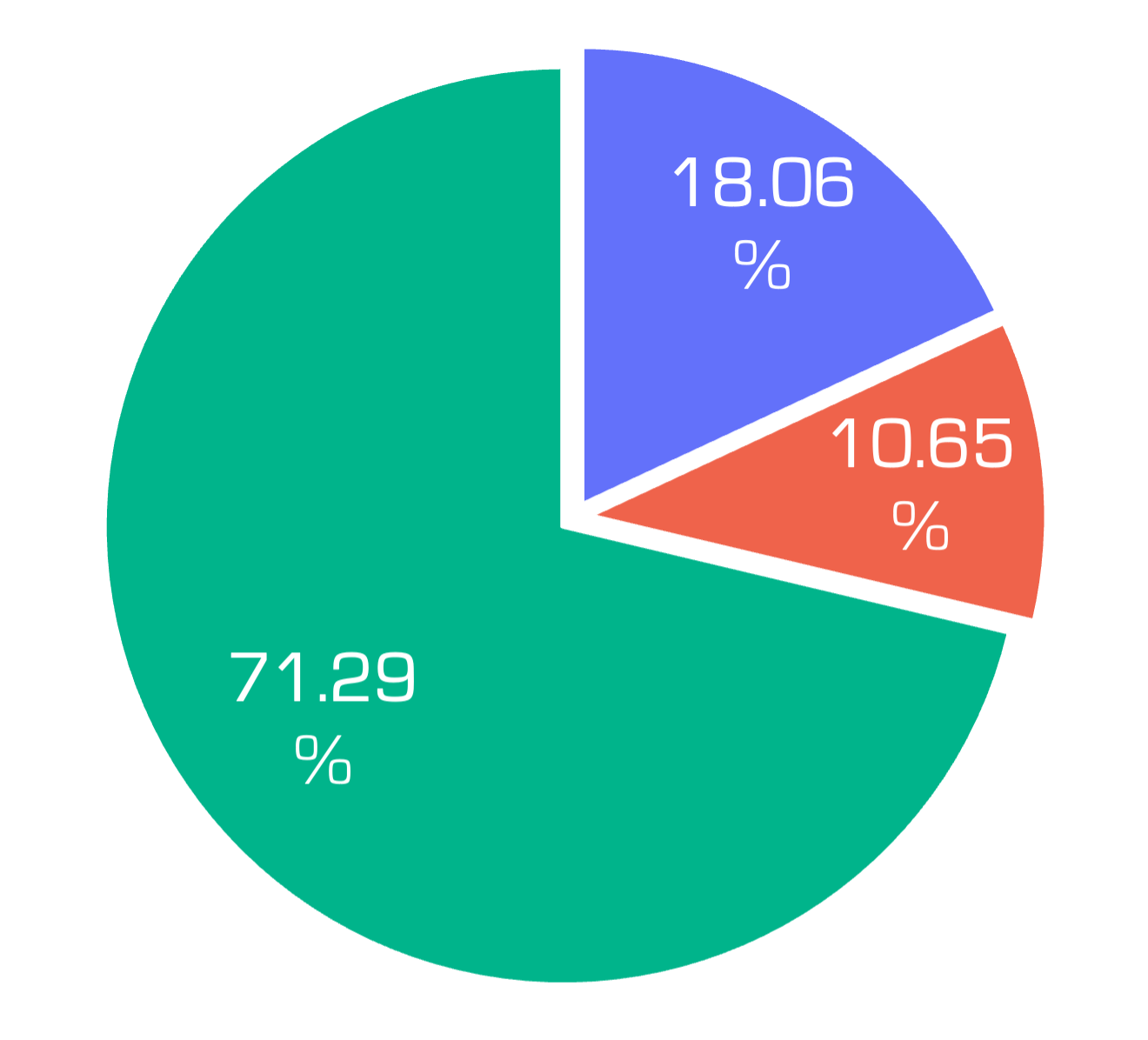

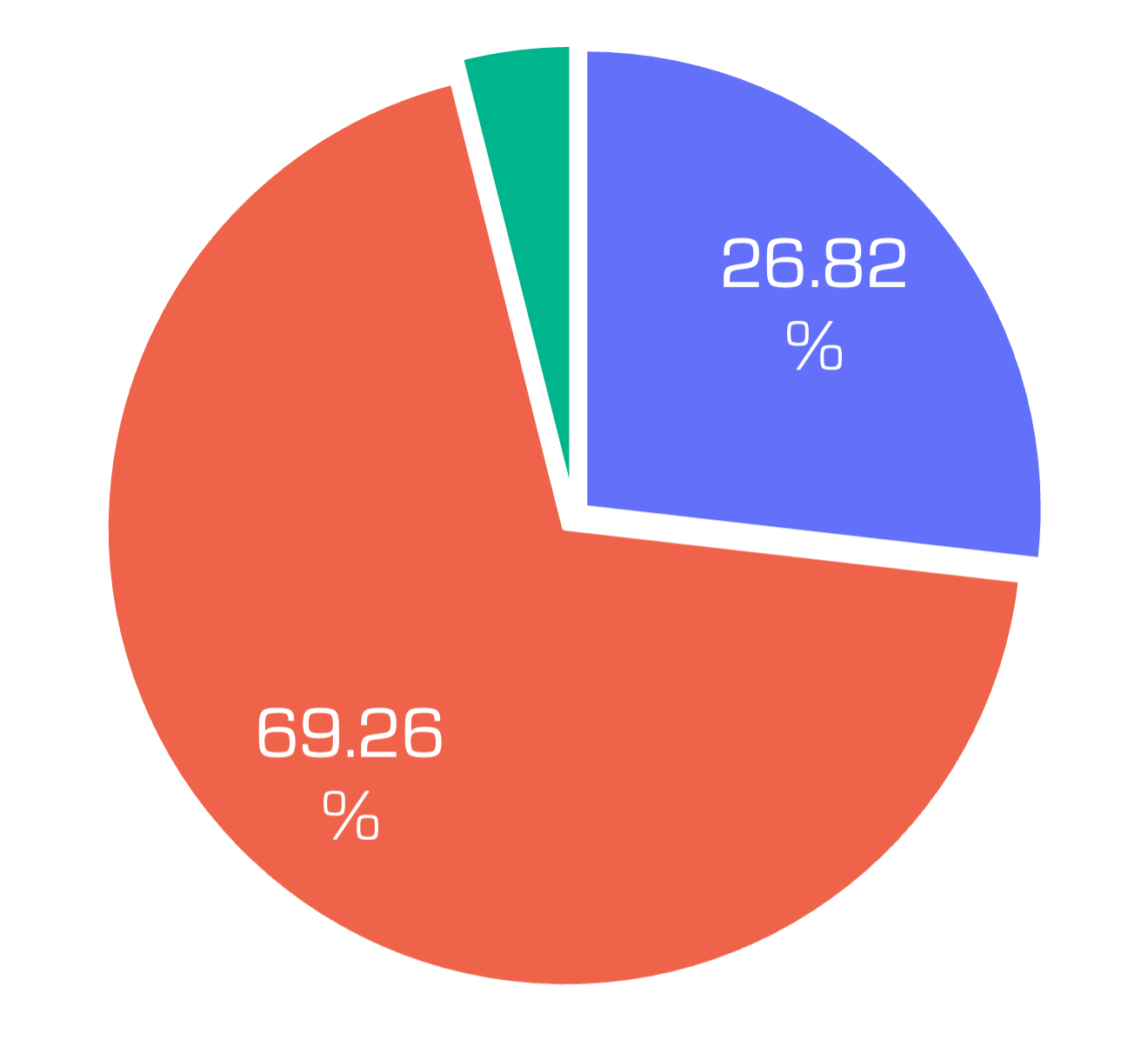

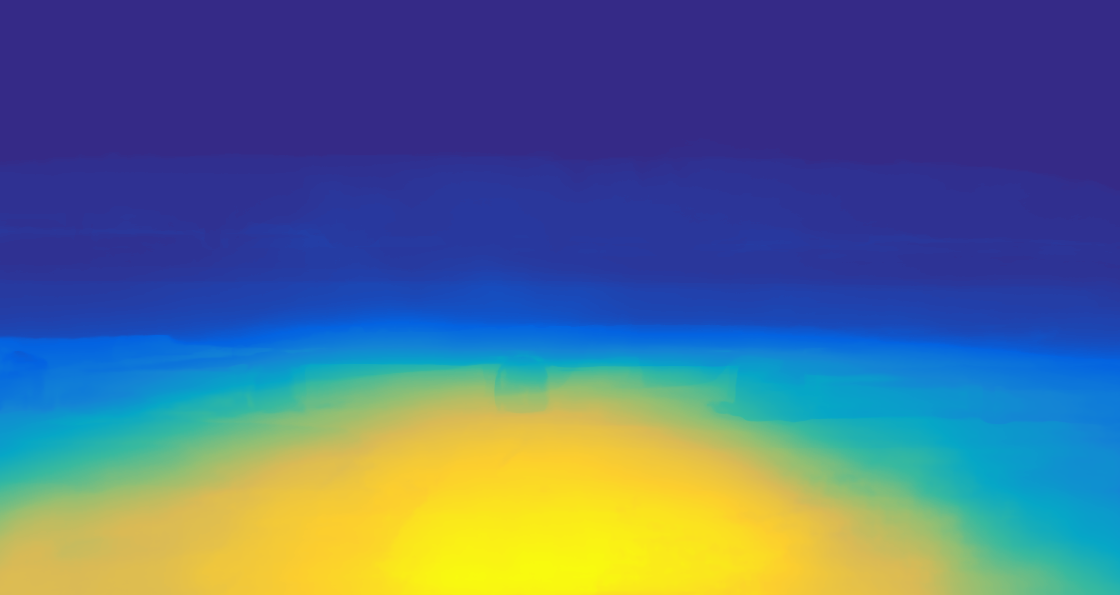

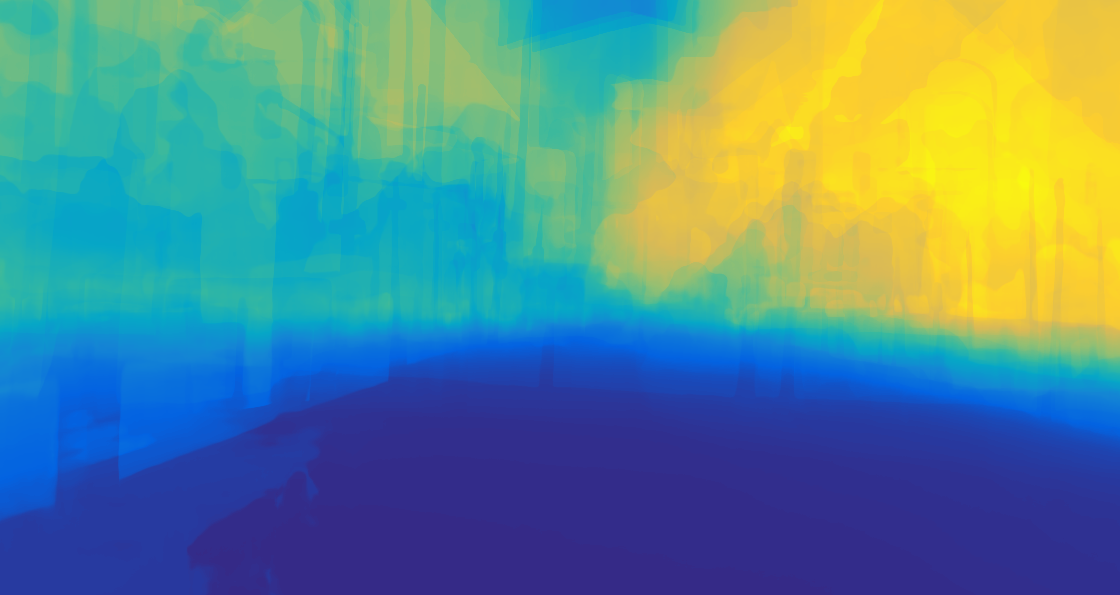

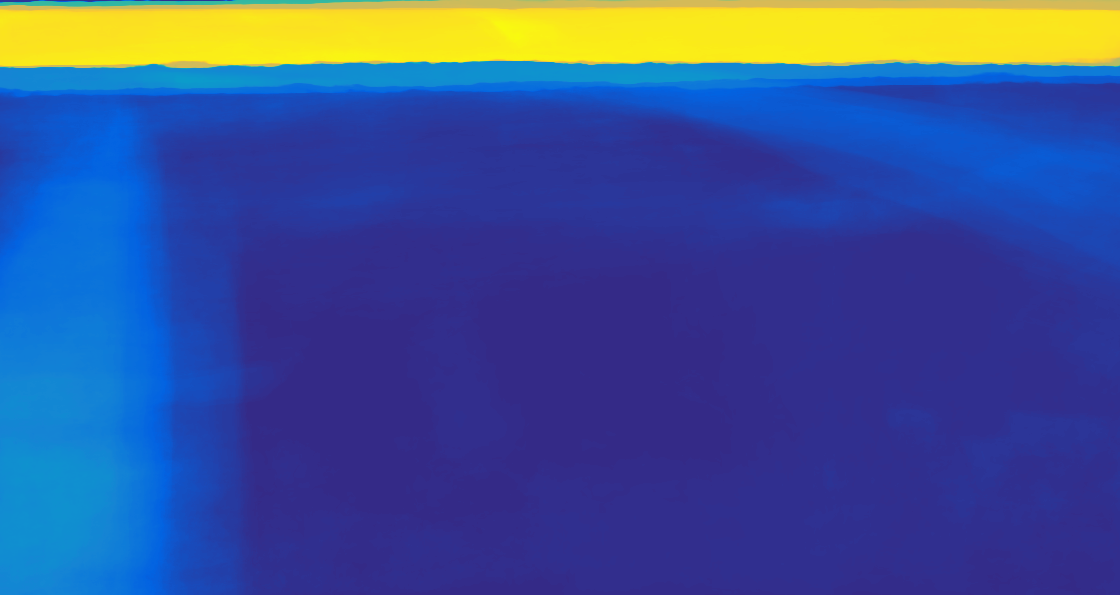

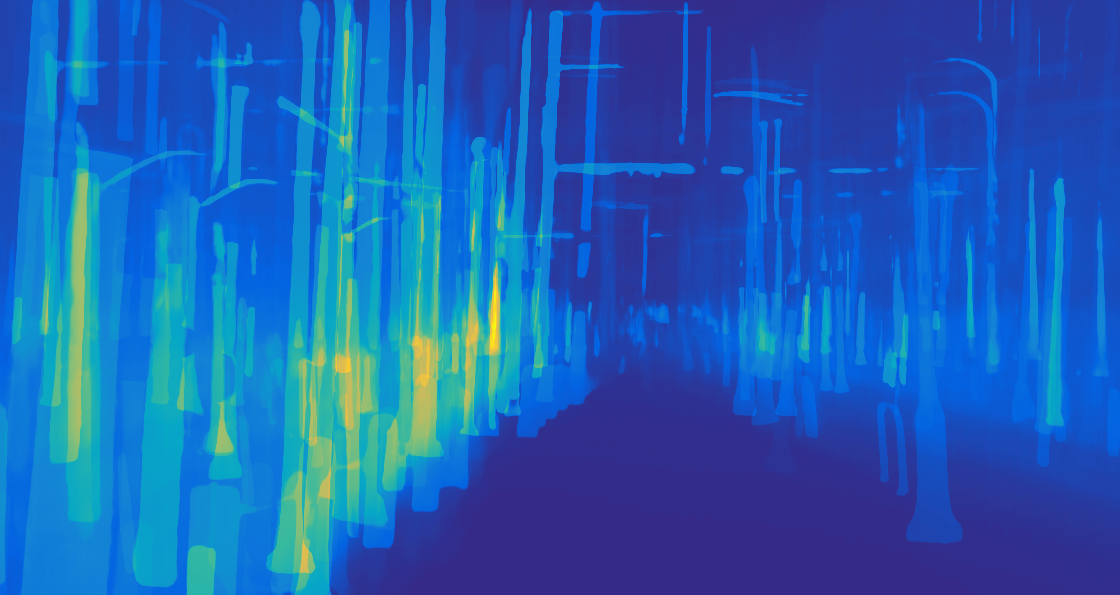

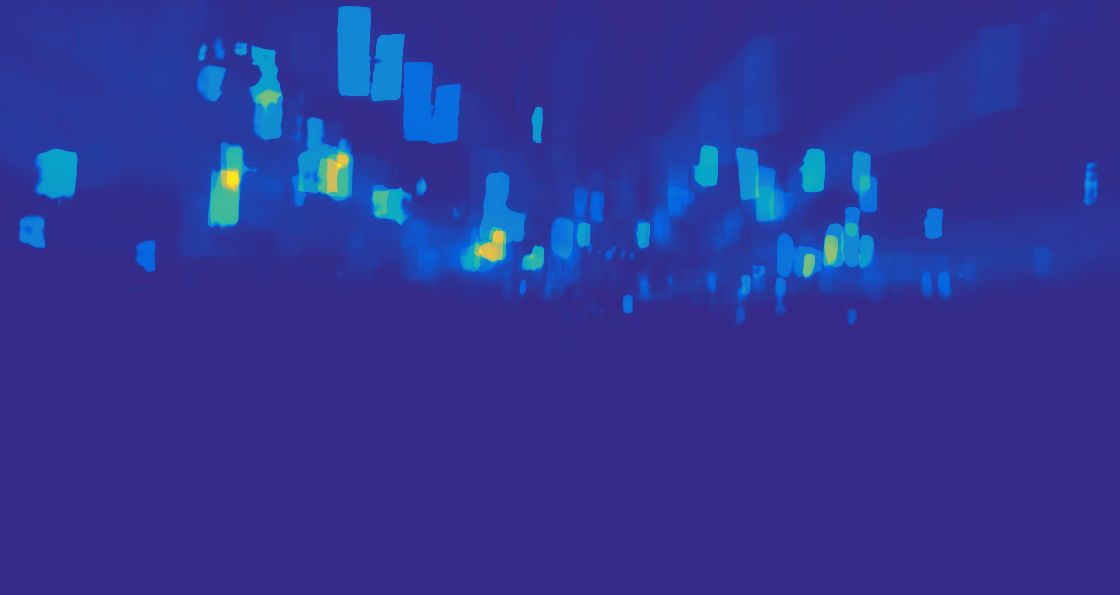

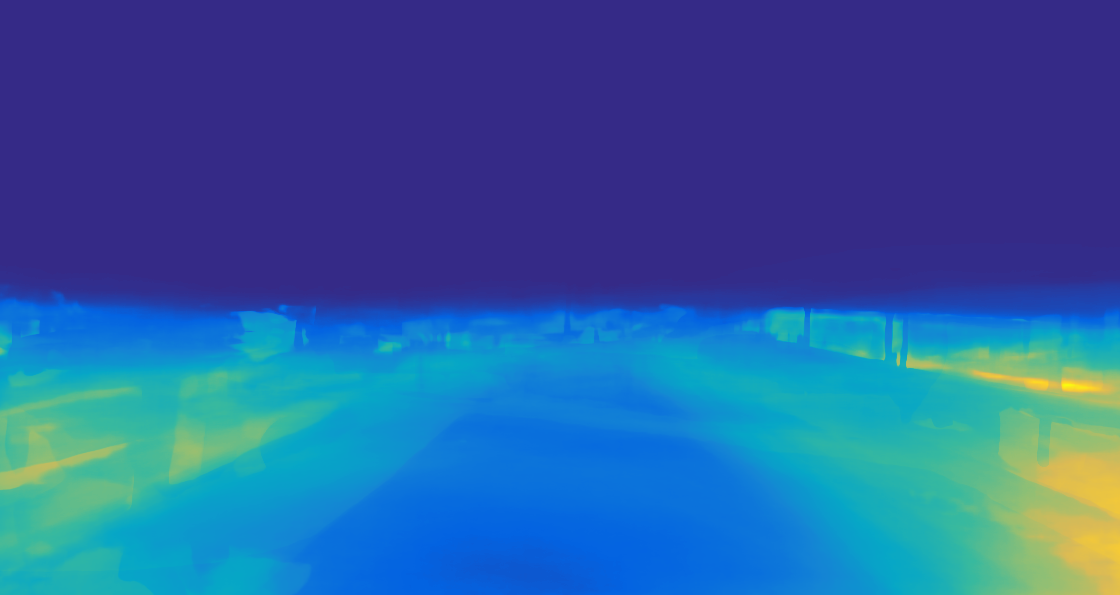

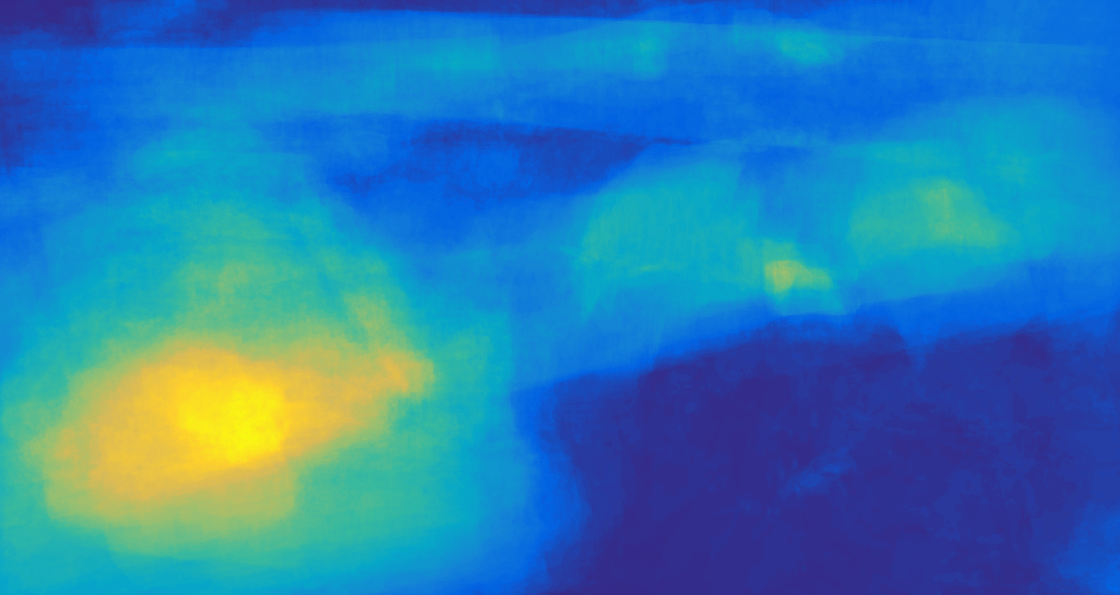

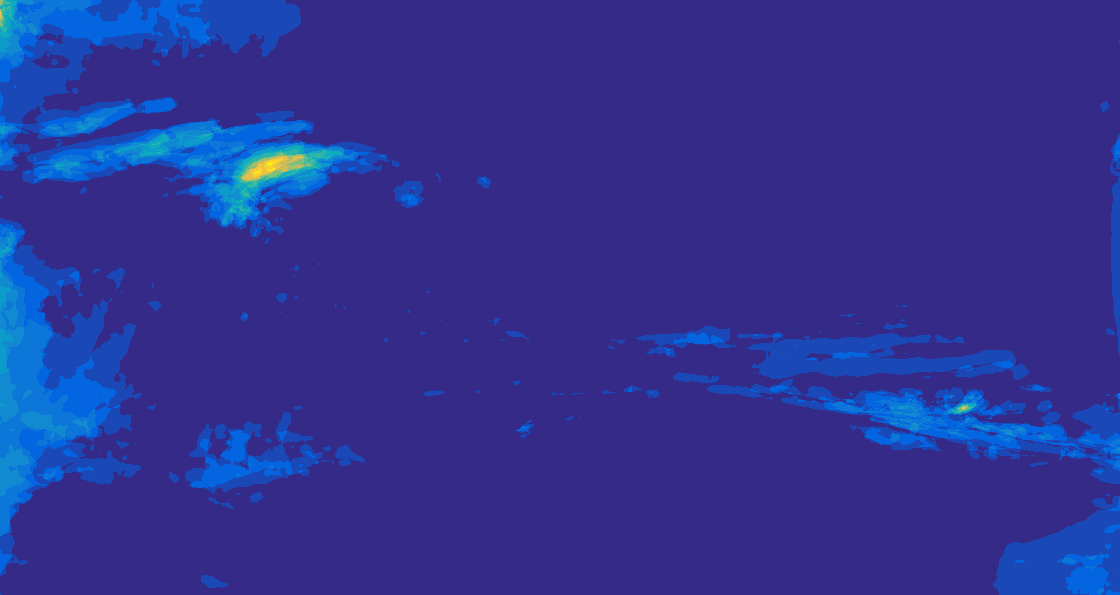

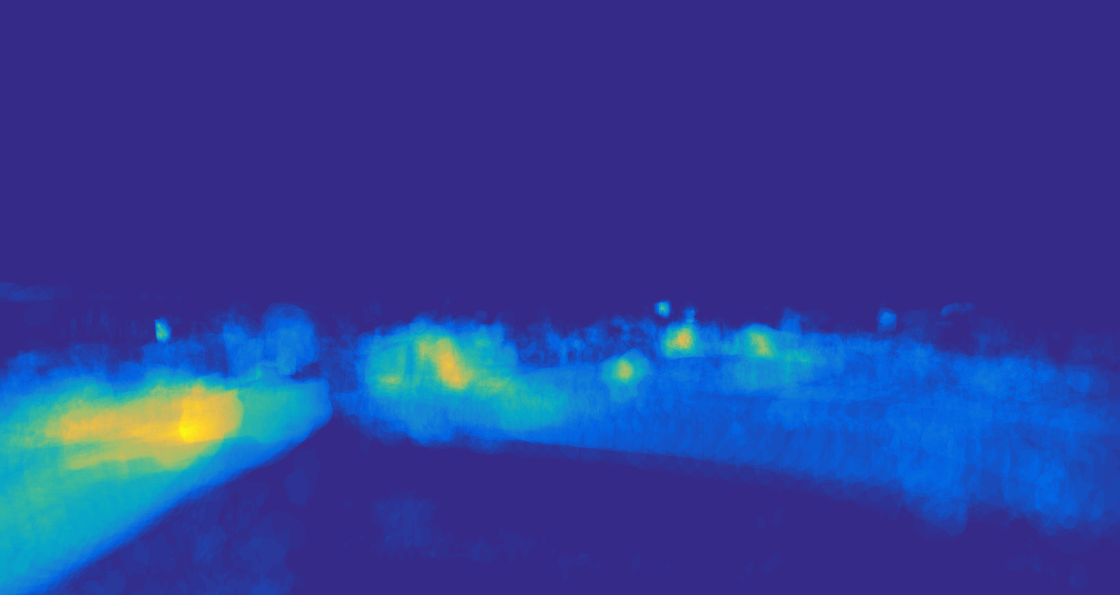

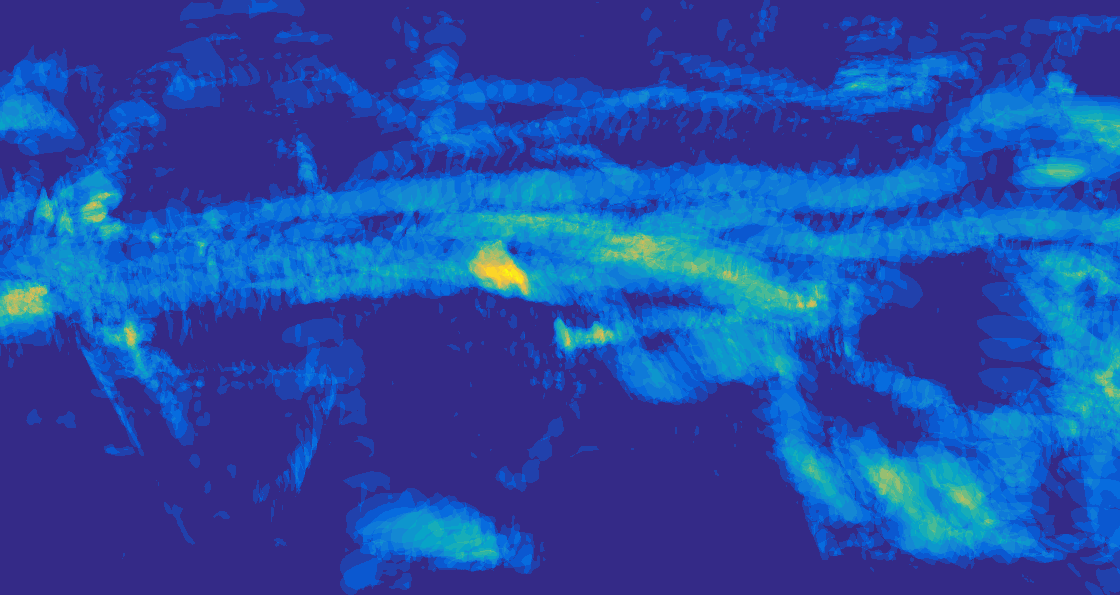

Quadruped (Pq) platforms.

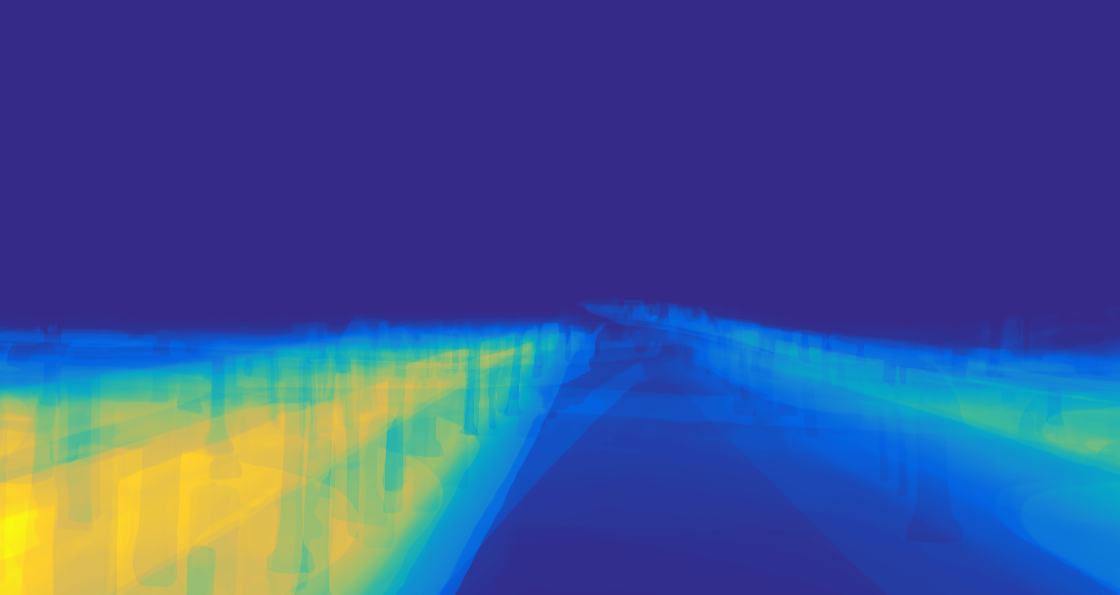

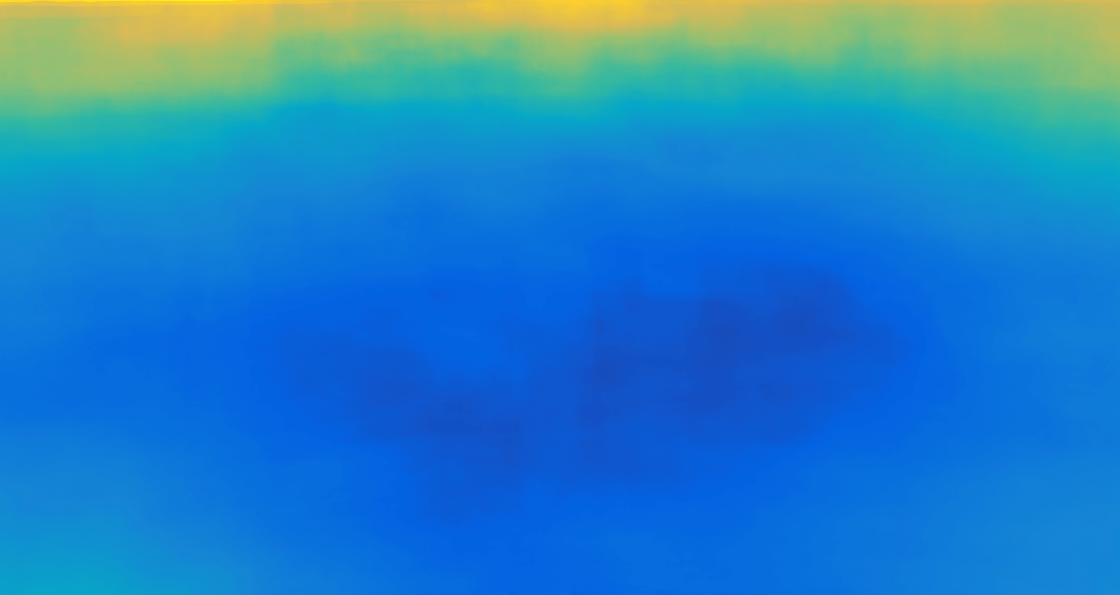

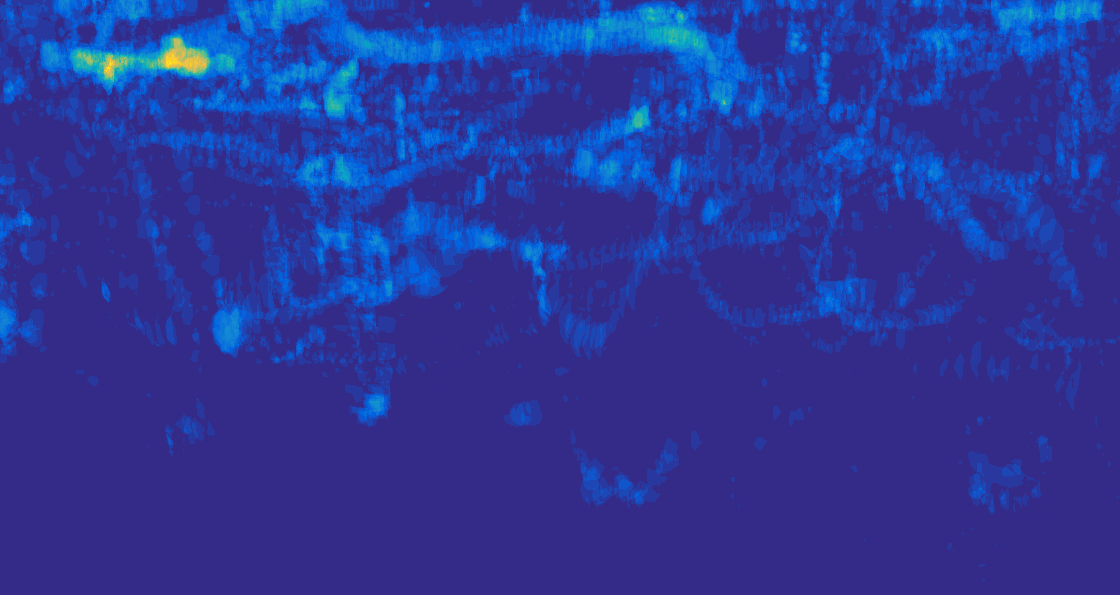

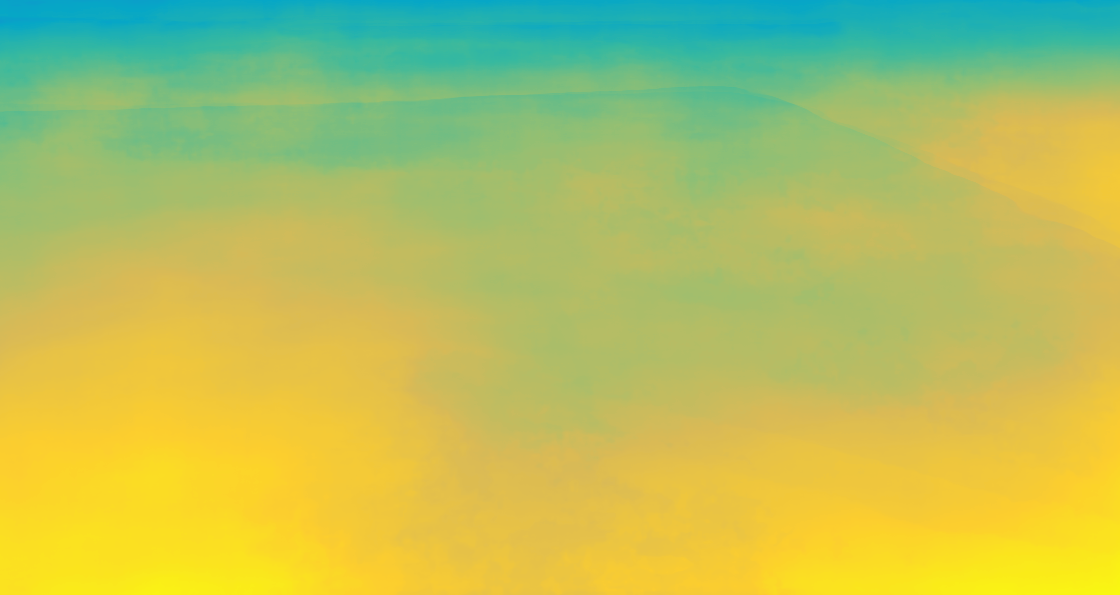

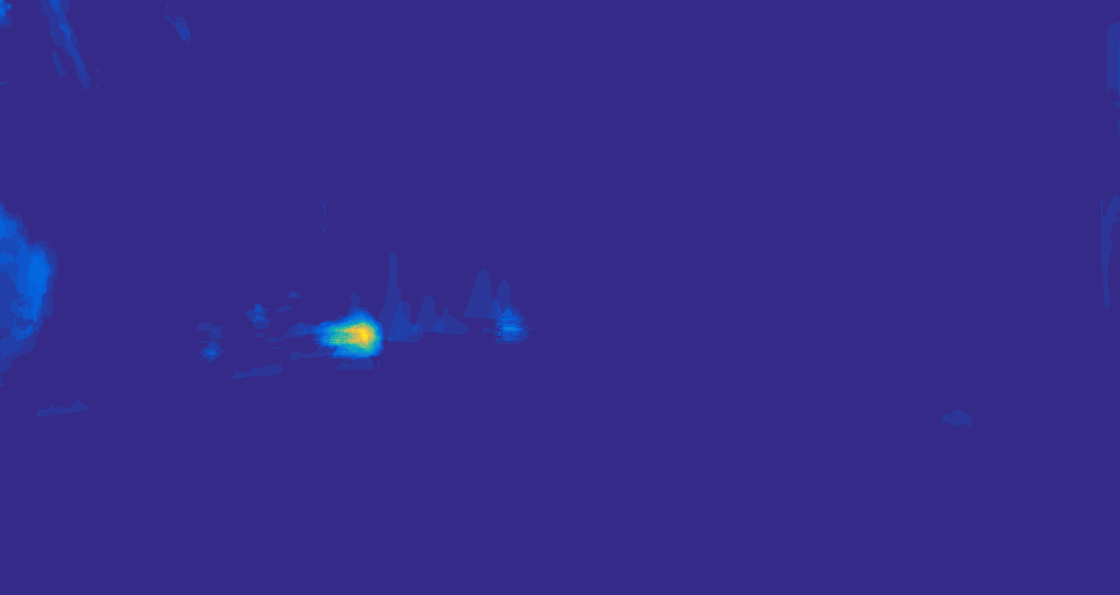

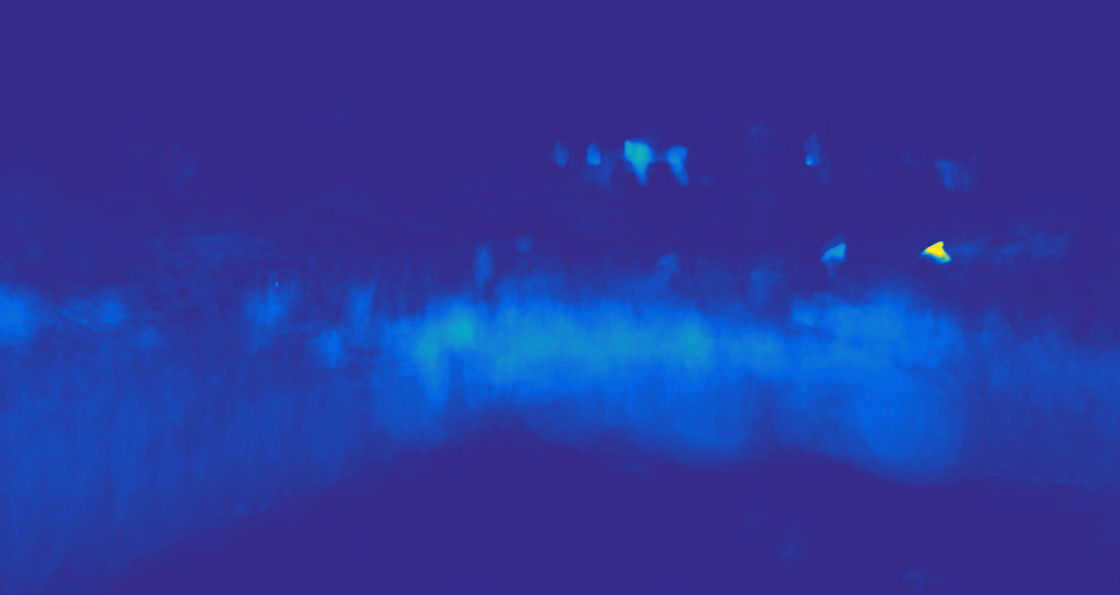

We compare the core attributes (viewpoint, speed, stability), event data distributions, and semantic distributions across event data acquired by three platforms, highlighting the challenges of adapting event camera perception to diverse operational contexts.

These variations motivate the need for a robust cross-platform adaptation framework to harmonize event-based dense perception across distinct environmental setups and conditions.
